Let’s kick off with a question. Have you ever shared a secret? It could be your best friend’s crush, a cherished family recipe, or maybe some spicy gossip. We’ve all been there at some point. You might find this familiar – that moment right before you spill the beans, when you hesitate, pondering whether it’s right to share. That’s your moral compass doing its thing 🧭. Now, picture standing on the edge of revealing not just a single secret but a torrent of them, secrets that could touch millions of lives. Welcome to the daring world of data publication.

Just like that brief pause before you share a secret, the final stage of a data project (publication) brings its own bundle of ethical concerns. What we choose to share, how we share it, who gets to see it, and how it might be misused are all significant considerations. It’s not just about juggling 0s and 1s; it’s about handling information that affects people’s lives, their livelihoods, and their personal info 🔐.

Why Should We Care About Data Ethics?

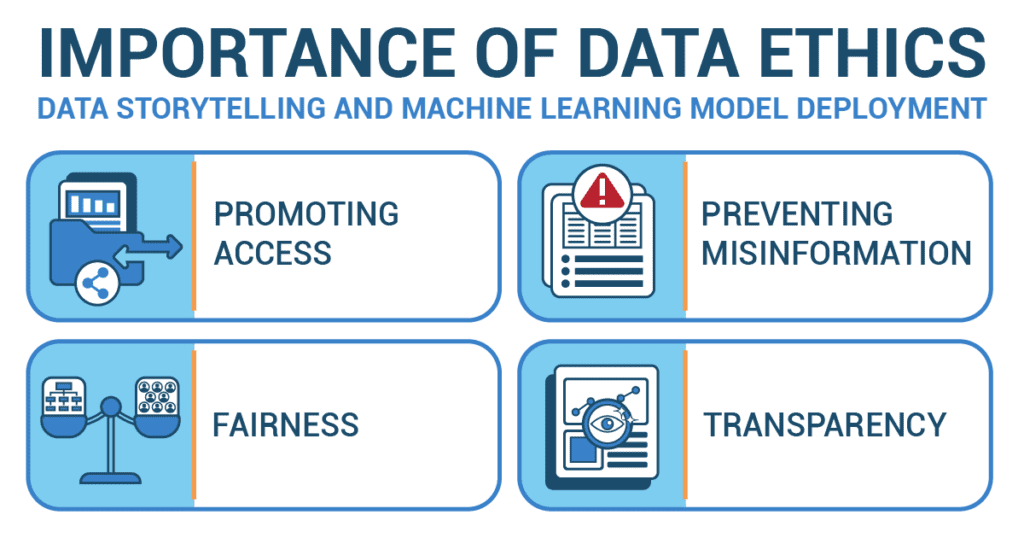

When we share our findings, just like when we share a secret, it’s crucial to think about how we do it. That’s where data ethics comes in! At this stage, it involves promoting access, preventing misinformation, ensuring fairness, and offering transparency.

Imagine your friends couldn’t come to the school play. You’d want to describe it to them in a way they can understand, right? That’s promoting access. For a data project, it means making sure the results reach the people they may affect and are presented in a way that is understandable and transparent.

But what if you accidentally tell them something that didn’t happen, like saying the lead actor forgot their lines when they didn’t? That’s misinformation. Misinterpreted or misrepresented results can lead to false conclusions, and those inaccuracies can influence decisions at every level, from individual choices to government policy.

What if your little sister was in the play, and you only talked about her performance and ignored everyone else? That’s not fair. Similarly, when we use data to make decisions, we need to make sure those decisions don’t unfairly harm or favor specific groups. Machine learning models can have built-in biases, often inherited from the data they’re trained on. These biases can perpetuate systemic inequities and cause real-world harm, especially in sensitive areas like credit scoring, job recruiting, and predictive policing.

Finally, you should tell your friends how you know what happened in each scene, so they can judge how much to trust your account. That’s being transparent. When we explain how we analyzed our data, others can trust our findings and even reproduce our work if they want to. Results and models should be open to scrutiny and discussion, and there should be a mechanism to address any ethical issues that arise after deployment.

How Do We Apply Data Ethics?

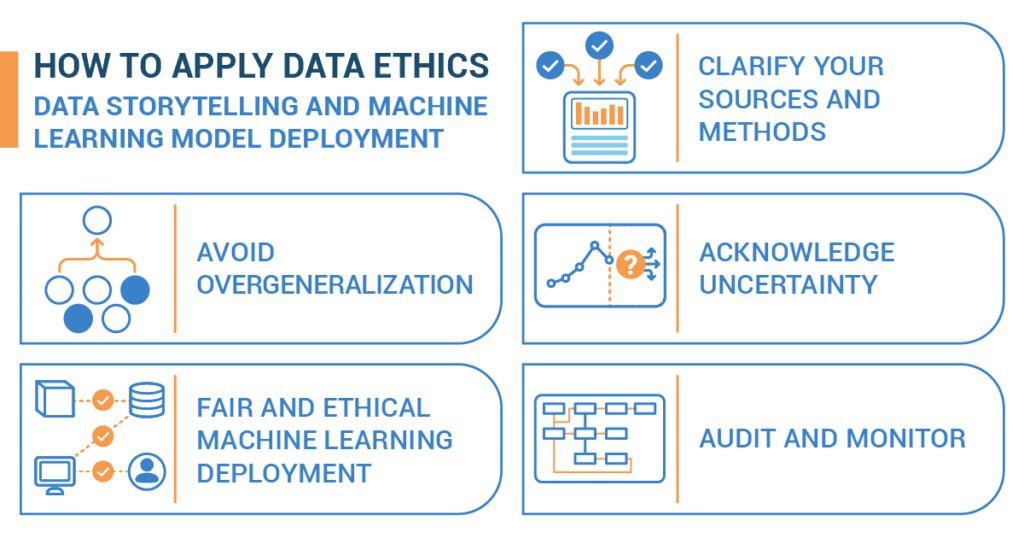

When you’re presenting your findings, here’s what you should do:

Clarify your sources and methods: Explain where your data came from, how it was collected, and how it was processed, along with the methods and techniques you used to analyze it. Apply the principle of transparency by being open about the limitations and potential biases in your data and methods, so your audience understands both the strengths and the weaknesses of your data and models. Transparency is one of the key requirements in the Ethics Guidelines for Trustworthy AI from the European Commission’s High-Level Expert Group on AI (AI HLEG). It’s like giving them a behind-the-scenes tour of your detective work!

Avoid overgeneralization: Don’t make your data sound more convincing than it really is. If you surveyed ten students about their favorite ice cream flavor, don’t claim to know the favorite flavor of all the students in your school. This principle of honesty ensures that your audience isn’t misled by the results. While making your findings accessible, be careful not to oversimplify to the point of distortion. It’s crucial to balance accessibility and accuracy. The American Statistical Association’s Ethical Guidelines for Statistical Practice highlight this principle.
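To see why ten responses can’t speak for a whole school, it helps to put a number on the uncertainty. Here is a minimal Python sketch, assuming a hypothetical survey in which 4 of 10 students pick chocolate. It uses the Wilson score interval, one standard way to get an approximate 95% confidence interval for a proportion; that choice is ours for illustration, not something the guidelines above prescribe.

```python
import math


def wilson_interval(successes: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Approximate confidence interval for a proportion (z=1.96 gives ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = (z / denom) * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2))
    return center - half, center + half


# Hypothetical survey: 4 of 10 students pick chocolate (40%).
small = wilson_interval(4, 10)
# Same 40% share, but from a hypothetical survey of 400 students.
large = wilson_interval(160, 400)

print(f"n=10:  {small[0]:.0%} to {small[1]:.0%}")
print(f"n=400: {large[0]:.0%} to {large[1]:.0%}")
```

With only ten students, the plausible range for chocolate’s true share runs from roughly 17% to 69%, far too wide to crown a school-wide favorite; the same 40% from 400 students narrows to roughly 35% to 45%.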

Acknowledge uncertainty: Remember, there’s always a chance your findings aren’t 100% correct. Like guessing the mystery flavor of a jelly bean, sometimes you’ll be right on the nose, other times not so much. Share that uncertainty with your audience, for example by reporting a range or margin of error alongside your main result.

If you’ve built a machine learning model, like a program that predicts the winner of the school’s basketball games based on past scores, there are additional considerations:

Fair and ethical machine learning deployment: Make sure your model respects everyone’s privacy. It also shouldn’t unfairly favor or disfavor any group, for example by predicting that one team will always lose just because they’ve lost a few times before. Algorithmic bias can creep in when a model is trained on biased or unfair data, so it’s critical to build your models carefully and keep monitoring them. When deploying a machine learning model, make sure it respects the norms of the context in which it will operate, in line with Helen Nissenbaum’s framework of privacy as contextual integrity.

Audit and monitor: Keep checking your model to make sure it works as expected. Even after you’ve finished building it, keep watching its performance, just like a coach monitoring their team during a game. The Ethically Aligned Design framework from the Institute of Electrical and Electronics Engineers (IEEE), the world’s largest technical professional organization, emphasizes this ongoing commitment to auditing and monitoring.
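One simple way to start auditing for the kind of bias described in the fairness point is to break your model’s results down by group. The Python sketch below assumes hypothetical records of (team, predicted win, actual win) and reports each team’s accuracy and predicted-win rate. The function name `audit_by_group`, the data, and the 0.2 warning threshold are all illustrative assumptions; real fairness audits usually look at several metrics, not just one.

```python
from collections import defaultdict


def audit_by_group(records):
    """Per-group accuracy and predicted-positive rate.

    Each record is (group, predicted, actual), where predicted and actual are 0 or 1.
    """
    counts = defaultdict(lambda: {"n": 0, "correct": 0, "predicted_pos": 0})
    for group, predicted, actual in records:
        c = counts[group]
        c["n"] += 1
        c["correct"] += int(predicted == actual)
        c["predicted_pos"] += predicted
    return {
        g: {
            "accuracy": c["correct"] / c["n"],
            "predicted_win_rate": c["predicted_pos"] / c["n"],
        }
        for g, c in counts.items()
    }


# Hypothetical predictions: (team, predicted_win, actual_win)
records = [
    ("Hawks", 1, 1), ("Hawks", 1, 0), ("Hawks", 1, 1), ("Hawks", 0, 0),
    ("Owls", 0, 1), ("Owls", 0, 1), ("Owls", 0, 0), ("Owls", 0, 1),
]
report = audit_by_group(records)
for team, stats in report.items():
    print(team, stats)

# Flag a large accuracy gap between groups for a human to review.
# The 0.2 threshold is an arbitrary illustration, not a standard.
accs = [s["accuracy"] for s in report.values()]
if max(accs) - min(accs) > 0.2:
    print("Warning: accuracy differs a lot between teams; investigate before publishing.")
```

In this made-up data, the model never predicts a win for the Owls even though they won three of four games, which is exactly the “one team will always lose” pattern the check is meant to surface.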

Watch Out for These Data Ethics Traps!

Just like every detective story has its twists and turns, working with data can sometimes lead us into a few traps. But don’t worry, I’ve got your back! Here are some common data ethics mistakes and how to avoid them.

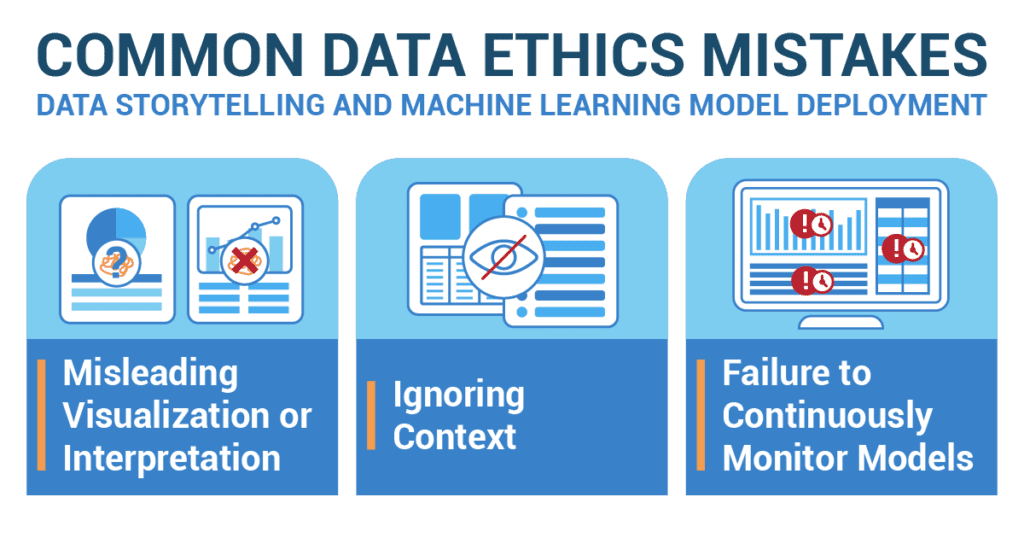

Misleading visualization or interpretation: Let’s say you’ve created a bar graph showing how many of each type of pizza your school cafeteria sold. If one bar is way taller than the others, it might look like that pizza is super popular. But what if that bar represents a whole month’s sales while the others represent just a week? That’s misleading! To avoid this, compare like with like (for example, put every bar on the same time period), label your axes and time periods clearly, and check your chart for ways it could be misread.
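Putting every bar on the same time period can be as simple as dividing each count by the length of time it covers. This Python sketch uses hypothetical cafeteria numbers and treats the month as four weeks for simplicity; both are assumptions for illustration.

```python
# Hypothetical cafeteria data: pizza type -> (pizzas sold, weeks covered by the count)
sales = {
    "Pepperoni": (120, 4),  # a whole month, treated here as four weeks
    "Cheese": (40, 1),      # one week
    "Veggie": (25, 1),      # one week
}

# Convert every count to the same unit (pizzas per week) before plotting,
# so bar heights can be compared fairly.
per_week = {name: sold / weeks for name, (sold, weeks) in sales.items()}

for name, rate in per_week.items():
    print(f"{name:10s} {rate:5.1f} per week")
```

Plotted raw, the pepperoni bar is three times as tall as the cheese bar; per week, cheese actually sells more (40 vs. 30).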

Ignoring context: Imagine you have a model that predicts the best time to hold band practice based on when the band room is usually empty. That model might not work for the choir, which might need the room at different times. Before using your model in a new situation, make sure you understand the differences and adjust your model accordingly.

Failure to continuously monitor models: Let’s go back to the basketball game prediction model. Suppose your model was trained on data from games when your school’s star player was always playing. If that player graduates, your model might start making wrong predictions. That’s why it’s important to keep checking your model to ensure it still works well. Set up automatic systems, if you can, to keep an eye on your model’s performance and impacts.
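An automatic check can start small. The Python sketch below uses a hypothetical `AccuracyMonitor` class that remembers whether each of the most recent predictions was right and raises a flag when recent accuracy falls below a threshold. The window size, threshold, and game results are made-up assumptions; real monitoring often also tracks changes in the input data and the model’s impact on different groups.

```python
from collections import deque


class AccuracyMonitor:
    """Tracks accuracy over the most recent `window` predictions and flags drops."""

    def __init__(self, window: int = 10, threshold: float = 0.6):
        self.results = deque(maxlen=window)  # 1 = correct, 0 = wrong; old entries drop off
        self.threshold = threshold

    def record(self, predicted: int, actual: int) -> None:
        self.results.append(int(predicted == actual))

    def accuracy(self) -> float:
        return sum(self.results) / len(self.results) if self.results else float("nan")

    def needs_attention(self) -> bool:
        # Only judge once the window is full, so a few early games don't trigger alerts.
        full = len(self.results) == self.results.maxlen
        return full and self.accuracy() < self.threshold


# Hypothetical season: accurate while the star player is on the team,
# then mostly wrong after they graduate. Each pair is (predicted_win, actual_win).
season = [(1, 1), (1, 1), (0, 0), (1, 1), (1, 1),   # star player on the team
          (1, 0), (1, 0), (1, 1), (1, 0), (1, 0)]   # after graduation

monitor = AccuracyMonitor(window=5, threshold=0.6)
for game, (predicted, actual) in enumerate(season, start=1):
    monitor.record(predicted, actual)
    if monitor.needs_attention():
        print(f"Game {game}: recent accuracy {monitor.accuracy():.0%}, time to investigate or retrain")
        break
```

Using a sliding window rather than all-time accuracy means the strong early games can’t hide a recent slump; here the alert fires at game 9, when only two of the last five predictions were right.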

Remember, becoming a great data detective takes practice. But as long as you stay aware of these potential pitfalls and work to avoid them, you’ll be well on your way!

That’s it, budding data detectives! Remember, with great data comes great responsibility. Use your skills to not only unravel the mysteries in the data but also share your findings responsibly. Happy investigating!

Star Gazer Lily: A Journey to Ethical Data Publication

Lily collected her data from an array of public astronomy databases, meticulously recording the date, duration, and intensity of each meteor shower. Once she had crunched the numbers and run her analysis, she found an interesting pattern: meteor shower activity was gradually increasing year after year.

Excited by her findings, Lily prepared to share her project with her classmates. However, Lily realized that how she presented her findings was just as important as the findings themselves. She was about to step into the realm of data ethics.

Lily knew that her results should be accessible and understandable to her classmates, some of whom weren’t as star-struck as she was. She carefully crafted simple and clear visuals, ensuring her charts didn’t distort the data or misrepresent her findings. She also provided a plain-language summary, making sure her classmates could grasp the trend without feeling overwhelmed by astronomical jargon.

Misinformation, Lily realized, was a big no-no. She double-checked her visuals for any potentially misleading interpretations and made certain to communicate the limitations of her project. Lily clarified that while her analysis suggested an increase in meteor shower activity, this was based on the last decade’s data and might not hold true for the future.

In her presentation, Lily was careful to respect the principle of fairness. While her project didn’t directly involve sensitive personal data or machine learning models, she acknowledged that scientific data, if mishandled, could lead to unfair outcomes. For instance, misinterpreted meteor shower data could unnecessarily alarm people or lead to skewed resource allocation in meteor tracking.

Finally, Lily demonstrated the value of transparency. She meticulously explained her sources, methods, and even potential biases. She pointed out that her data came from public databases, which might contain observational errors, and that her statistical methods, while rigorous, couldn’t account for every variable in the complex cosmos.

The day arrived for Lily to present her project. Her classmates listened, learned, and asked questions. They discussed not just meteor showers, but also the importance of data ethics when sharing findings. After the presentation, many classmates complimented Lily not only on her analysis but also on her clear, accessible, and responsible presentation of her findings.

Lily’s project was not just a journey through the stars but also a journey through data ethics. By considering accessibility, preventing misinformation, ensuring fairness, and providing transparency, Lily made sure that her exploration of the cosmos was as ethically grounded as it was scientifically sound.