Let’s imagine this scenario: You’re surfing the internet on a lazy weekend, searching for a cool new pair of sneakers. A few hours later, you start noticing pop-ups for sneaker sales, emails about discounts on similar brands, and even social media ads showcasing the latest sneaker trends. You pause and wonder, “How did they know I was looking for sneakers?” It’s all about data, my friends. The websites you visit, along with the advertising networks behind them, track your browsing and search activity, and based on it they start serving you personalized ads and discounts.

This type of scenario is more common than we realize in our digital lives. We exist in a data-driven universe where every search, every click, every online purchase, and even every meme we share is being collected, analyzed, and utilized. Our data shapes the virtual world around us, leading to personalized recommendations, targeted ads, optimized gaming experiences, and even better-curated playlists.

The importance of data ethics and statistical analysis

Imagine you’re a detective. You’ve got a pile of clues (or data) in front of you. Your job? To solve the case (or understand the data). But here’s the catch – not all clues are equal, and how you use them can change the story you’re telling.

That’s where data ethics comes into play. It’s the guiding principle of your detective work. It ensures you don’t unfairly pick only the clues that support your predetermined conclusions, kind of like picking out only the sprinkles you like from a bowl of rainbow sprinkles. A classic example is “p-hacking”: running analysis after analysis, tweaking variables, or selectively reporting results until something crosses the threshold for statistical significance (commonly p < 0.05). That’s a no-no in our detective world. Similarly, the choice of model or method can bias the analysis if it isn’t aligned with the characteristics of the data or the problem at hand. For example, a survey is a great tool if you want to know people’s opinions on specific topics. It is not the best method if you want to understand the reasons underlying those opinions.
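p-hacking is a no-no for a concrete reason: pure chance produces "significant" results surprisingly often when you test enough things. The sketch below is illustrative only. The function names are hypothetical, and the data is random noise with no real effect. It also uses a normal-approximation z-test rather than a t-test, which is a reasonable shortcut at this sample size.

```python
import math
import random


def family_wise_error_rate(alpha: float, num_tests: int) -> float:
    """Chance of at least one false positive across independent tests when no real effect exists."""
    return 1 - (1 - alpha) ** num_tests


def two_sample_z_pvalue(a: list[float], b: list[float]) -> float:
    """Two-sided p-value for a difference in means (normal approximation, fine for large samples)."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    var_a = sum((x - mean_a) ** 2 for x in a) / (len(a) - 1)
    var_b = sum((x - mean_b) ** 2 for x in b) / (len(b) - 1)
    z = (mean_a - mean_b) / math.sqrt(var_a / len(a) + var_b / len(b))
    # P(|Z| >= |z|) for a standard normal Z equals erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_p_hacking(num_metrics: int = 20, n: int = 200, seed: int = 0) -> list[float]:
    """Compare two groups on many metrics drawn from the SAME distribution (pure noise)."""
    rng = random.Random(seed)
    p_values = []
    for _ in range(num_metrics):
        group_a = [rng.gauss(0.0, 1.0) for _ in range(n)]
        group_b = [rng.gauss(0.0, 1.0) for _ in range(n)]
        p_values.append(two_sample_z_pvalue(group_a, group_b))
    return p_values


alpha, k = 0.05, 20
print(f"Chance of at least one false positive in {k} tests: {family_wise_error_rate(alpha, k):.2f}")

p_values = simulate_p_hacking(num_metrics=k)
print("'Significant' at alpha:", sum(p < alpha for p in p_values))
# Bonferroni correction: judge each test at alpha / k so the overall error rate stays at or below alpha.
print("'Significant' after Bonferroni:", sum(p < alpha / k for p in p_values))
```

Suppose you run 20 independent tests at α = 0.05. The chance of at least one false positive is 1 - 0.95^20, roughly 64%. That is why testing many outcomes and reporting only the "hits" misleads. The Bonferroni correction is one simple, conservative remedy.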

Data ethics can significantly influence how we build and evaluate our models. Evaluation that isn’t conducted ethically may not fully disclose the model’s limitations and potential biases. That leads to overconfidence in its predictions or conclusions.
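A single overall score can hide a model's limitations. Reporting results per group is a simple way to surface them. Below is a minimal sketch with invented toy labels and predictions. The group names "A" and "B" are hypothetical.

```python
from collections import defaultdict


def accuracy(y_true: list[int], y_pred: list[int]) -> float:
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def accuracy_by_group(y_true: list[int], y_pred: list[int], groups: list[str]) -> dict[str, float]:
    """Compute accuracy separately for each group instead of one overall number."""
    buckets = defaultdict(lambda: ([], []))
    for t, p, g in zip(y_true, y_pred, groups):
        buckets[g][0].append(t)
        buckets[g][1].append(p)
    return {g: accuracy(ts, ps) for g, (ts, ps) in buckets.items()}


# Toy labels and predictions, invented for illustration only.
y_true = [1, 0, 1, 1, 0, 1, 0, 0, 1, 0]
y_pred = [1, 0, 1, 1, 0, 1, 0, 1, 0, 1]
groups = ["A", "A", "A", "A", "A", "A", "B", "B", "B", "B"]

print("Overall accuracy:", accuracy(y_true, y_pred))
print("By group:", accuracy_by_group(y_true, y_pred, groups))
```

Here the overall accuracy of 70% hides a gap. The model is right on every example from group A but on only one in four from group B.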

Apply data ethics when performing statistical analysis and machine learning modeling

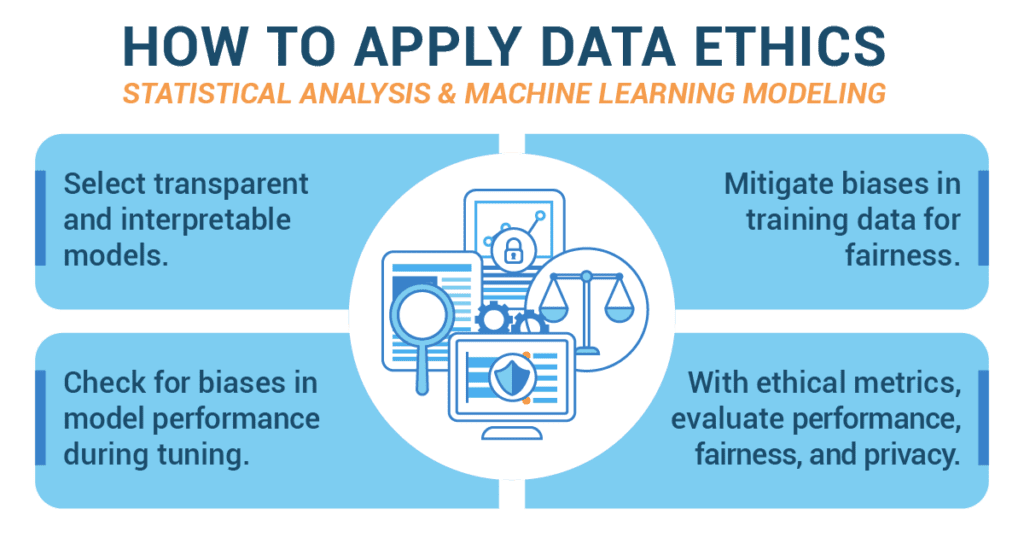

- Choose models that can provide transparency and interpretability.

Just like detectives follow a code of conduct, we data detectives also have a framework to guide us: FATML, which stands for Fairness, Accountability, and Transparency in Machine Learning. We have to choose our models and methods thoughtfully, ensuring they’re fair and explainable and don’t favor one group over another. Above all, make sure the selected models can be explained to stakeholders.
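A linear model is among the easiest to explain, because every prediction breaks down into per-feature contributions. The sketch below assumes a hypothetical customer-scoring model. The feature names, weights and intercept are made up for illustration.

```python
# Hypothetical feature names and weights, chosen only to illustrate the idea.
WEIGHTS = {"years_as_customer": 0.4, "support_tickets": -0.8, "monthly_spend": 0.02}
INTERCEPT = -1.0


def explain_score(features: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return the model's score plus each feature's additive contribution (weight * value)."""
    contributions = {name: weight * features[name] for name, weight in WEIGHTS.items()}
    score = INTERCEPT + sum(contributions.values())
    return score, contributions


score, contributions = explain_score({"years_as_customer": 5, "support_tickets": 2, "monthly_spend": 50})
print(f"score = {score:.2f}")
# List the reasons behind the score, largest effect first, in a form stakeholders can read.
for name, value in sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True):
    print(f"  {name}: {value:+.2f}")
```

More complex models may be more accurate. However, they need post-hoc tools to recover this kind of breakdown, whereas a linear model provides it directly.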

- Ensure fairness by critically analyzing and processing the training data to mitigate any existing biases.

There are cool tools available to help us along the way. IBM’s AI Fairness 360 helps us examine our evidence (our data and our models) for bias and provides algorithms to mitigate it. Google’s TensorFlow Privacy helps us train models with differential privacy, protecting the people behind the data, just like detectives protect their informants’ identities.
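AI Fairness 360 reports group-fairness metrics such as statistical parity difference and disparate impact. To our knowledge, these are exposed as methods of those names on its `BinaryLabelDatasetMetric` class. Rather than reproduce that API, the plain-Python sketch below shows what the two metrics compute. The group labels and outcomes are hypothetical.

```python
def selection_rate(outcomes: list[int], groups: list[str], group: str) -> float:
    """Share of favorable outcomes (1s) among members of one group."""
    member_outcomes = [o for o, g in zip(outcomes, groups) if g == group]
    return sum(member_outcomes) / len(member_outcomes)


def statistical_parity_difference(outcomes, groups, unprivileged, privileged) -> float:
    """Selection rate of the unprivileged group minus that of the privileged group (0 is parity)."""
    return selection_rate(outcomes, groups, unprivileged) - selection_rate(outcomes, groups, privileged)


def disparate_impact(outcomes, groups, unprivileged, privileged) -> float:
    """Ratio of selection rates (1 is parity); values below 0.8 are often flagged (four-fifths rule)."""
    return selection_rate(outcomes, groups, unprivileged) / selection_rate(outcomes, groups, privileged)


# Hypothetical outcomes (1 = favorable, e.g. offered a discount) for two groups.
outcomes = [1, 1, 1, 0, 1, 1, 0, 0, 0, 1]
groups = ["priv"] * 5 + ["unpriv"] * 5

print("Statistical parity difference:", statistical_parity_difference(outcomes, groups, "unpriv", "priv"))
print("Disparate impact:", disparate_impact(outcomes, groups, "unpriv", "priv"))
```

In this toy data, the unprivileged group receives the favorable outcome half as often as the privileged group. That disparity is worth investigating before the data feeds a model.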

- During tuning, it’s crucial to check again for biases in the model’s performance.

Checking for bias is an ongoing process for ML models. Just as a detective reviews the facts before presenting the case, we recheck our models’ performance for biases after each round of tuning, for example by comparing error rates across groups. Privacy deserves the same ongoing attention. Researchers use techniques like differential privacy, which adds carefully calibrated random noise, to protect the privacy of individuals in the dataset.
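The Laplace mechanism is a minimal sketch of the idea behind differential privacy. It adds noise scaled to how much one person can change the answer. The record field below is hypothetical. Hand-rolled floating-point noise like this has known weaknesses, so real deployments should use a vetted differential privacy library.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw from Laplace(0, scale): the difference of two Exponential(1) draws, rescaled."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(records: list[dict], predicate, epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy using the Laplace mechanism.

    Adding or removing one person changes a count by at most 1 (its sensitivity),
    so Laplace noise with scale 1 / epsilon is enough. Smaller epsilon means more privacy and more noise.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical survey records; the field name is made up for this example (true count is 100).
records = [{"uses_sunscreen": i % 3 == 0} for i in range(300)]
rng = random.Random(42)
for eps in (1.0, 0.1):
    noisy = private_count(records, lambda r: r["uses_sunscreen"], epsilon=eps, rng=rng)
    print(f"epsilon={eps}: noisy count = {noisy:.1f}")
```

Each released count is individually noisy, but averages over many releases stay centered on the true value. The smaller epsilon yields visibly noisier answers.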

- Use metrics that take into account not just model performance, but also ethical considerations like fairness and privacy.
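One way to put these points into practice is to report a fairness measure next to accuracy and let it constrain model selection. This sketch uses the gap in true positive rates between groups, often called the equal opportunity difference, to choose a decision threshold. The data, thresholds and the 0.1 cap are hypothetical choices for illustration.

```python
def true_positive_rate(y_true: list[int], y_pred: list[int]) -> float:
    """Share of actual positives the model catches (assumes at least one positive)."""
    preds_on_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(preds_on_positives) / len(preds_on_positives)


def evaluate(y_true, scores, groups, threshold):
    """Return (accuracy, largest TPR gap between any two groups) at a decision threshold."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    acc = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    tprs = []
    for g in set(groups):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        tprs.append(true_positive_rate([y_true[i] for i in idx], [y_pred[i] for i in idx]))
    return acc, max(tprs) - min(tprs)


def pick_threshold(y_true, scores, groups, thresholds, max_gap):
    """Most accurate threshold whose TPR gap is within max_gap, as (threshold, acc, gap) or None."""
    best = None
    for th in thresholds:
        acc, gap = evaluate(y_true, scores, groups, th)
        if gap <= max_gap and (best is None or acc > best[1]):
            best = (th, acc, gap)
    return best


# Toy validation data, invented for illustration.
y_true = [1, 1, 1, 0, 0, 1, 1, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.3, 0.2, 0.6, 0.5, 0.3, 0.4, 0.1]
groups = ["A"] * 5 + ["B"] * 5
thresholds = [0.25, 0.35, 0.45, 0.55, 0.65]

print("Accuracy only:    ", pick_threshold(y_true, scores, groups, thresholds, max_gap=1.0))
print("With fairness cap:", pick_threshold(y_true, scores, groups, thresholds, max_gap=0.1))
```

On this toy data, optimizing accuracy alone picks the 0.45 threshold (90% accuracy), but it catches only two of group B's three positives. Capping the gap at 0.1 trades ten points of accuracy for equal true positive rates.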

Common data ethics mistakes and countermeasures for statistical analysis and machine learning

- Ignoring biases in data

  - A detective who focuses too much on one clue can miss other important pieces of information. Similarly, in data analysis, we may overlook biases in our data, leading to unfair conclusions.

  - Countermeasure: Perform a thorough bias audit of the dataset and mitigate biases where possible through techniques like re-sampling or re-weighting.

- Not considering the implications of model use

  - Failing to consider how the model will be used can lead to harmful outcomes, especially if the model is used in high-stakes decisions.

  - Countermeasure: Thoroughly assess the potential impacts of the model, including consulting with affected communities where possible.

- Lack of transparency

  - Models that are too complex to understand can create mistrust and make it difficult to identify when they’re making unfair or incorrect predictions.

  - Countermeasure: Prioritize transparency and interpretability in model selection. Use tools like SHAP (SHapley Additive exPlanations) to help explain complex models.
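The re-weighting countermeasure can be sketched with the reweighing scheme of Kamiran and Calders. AI Fairness 360 also ships this scheme as its `Reweighing` preprocessing algorithm. What follows is a minimal plain-Python sketch under that assumption, not that library's implementation, and the data is hypothetical. In practice, the weights would go to a learner that accepts per-sample weights, such as the `sample_weight` argument of many scikit-learn estimators' `fit` methods.

```python
from collections import Counter


def reweighing_weights(groups: list[str], labels: list[int]) -> list[float]:
    """Per-example weights w(g, y) = P(g) * P(y) / P(g, y), as in Kamiran & Calders' reweighing.

    Under these weights, group membership and label become statistically independent,
    so combinations that are under-represented in the data count for more.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group) -> float:
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(w for g, y, w in zip(groups, labels, weights) if g == group and y == 1)
    return positive / total


# Hypothetical training data in which group A has far more positive labels than group B.
groups = ["A"] * 4 + ["B"] * 4
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)

for g in ("A", "B"):
    raw = sum(y for gg, y in zip(groups, labels) if gg == g) / groups.count(g)
    rew = weighted_positive_rate(groups, labels, weights, g)
    print(f"group {g}: raw positive rate {raw:.2f}, reweighed {rew:.2f}")
```

Before weighting, the positive rates are 75% for group A and 25% for group B. After weighting, both groups sit at 50%, so a model trained on the weighted data no longer learns the skew from group membership alone.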

Ethical Analysis in the Cosmetics Data Project

In the realm of cosmetics and beauty, Jessica Reynolds, a seasoned corporate professional, embarked on a data project with a dual purpose: to harness data-driven insights to create innovative cosmetic products while upholding the highest standards of data ethics. Her journey involved navigating the complexities of data analysis while ensuring that every decision was aligned with ethical considerations.

Jessica’s project was ambitious – to leverage data analytics to formulate cosmetic products that catered to diverse skin types and preferences. However, she understood that the world of cosmetics was deeply personal, and the ethical implications of data analysis couldn’t be overlooked. During the analysis stage of the project, Jessica implemented a range of ethical practices to ensure that the data insights were used responsibly, respecting individual autonomy and safeguarding user interests.

Understanding the potential implications of data analysis, Jessica carefully reviewed every aspect of her work to ensure that the results weren’t misleading or harmful. She recognized that a skewed analysis could lead to unjustified conclusions, potentially affecting product formulation and user perception. Jessica was also well aware of the potential for bias in data analysis, especially in beauty and cosmetics. She worked closely with her data science team to develop algorithms that could detect and mitigate biases in product recommendations. Her approach was rooted in a commitment to fairness and inclusivity.

Drawing on her corporate acumen, Jessica emphasized the importance of user-centric insights. She ensured that the analysis not only captured general trends but also focused on individual preferences. By respecting and prioritizing individual choices, she aligned her analysis with ethical considerations that valued diversity. Jessica recognized the significance of transparency in the world of cosmetics. She ensured that the analysis methodologies were clearly communicated to stakeholders, from company executives to consumers. This transparency not only built trust but also held her accountable for the decisions made based on the analysis.

Applying her understanding of privacy concerns, Jessica implemented strict protocols to protect user privacy during analysis. She ensured that all individual identifiers were removed and that the analysis focused only on aggregated data, to guard against re-identification. Beyond the immediate project goals, Jessica saw an opportunity to educate consumers about the ethical implications of data analysis in cosmetics. She organized seminars and webinars to discuss the benefits and potential pitfalls of data-driven beauty solutions, ensuring that consumers were empowered to make informed decisions.
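Removing identifiers and reporting only aggregates can be sketched as follows. Aggregation lowers re-identification risk but does not eliminate it, because combinations of the remaining attributes can still single people out. For that reason, this sketch also withholds any group too small to hide an individual. The field names, the identifier list and the minimum group size of 5 are all hypothetical choices.

```python
from collections import defaultdict

# Hypothetical direct identifiers; a real project would audit its own schema.
DIRECT_IDENTIFIERS = {"name", "email", "phone"}


def strip_identifiers(records: list[dict]) -> list[dict]:
    """Drop fields that identify a person directly."""
    return [{k: v for k, v in r.items() if k not in DIRECT_IDENTIFIERS} for r in records]


def aggregate_with_suppression(records, group_key, value_key, min_group_size=5):
    """Mean of value_key per group; groups smaller than min_group_size are withheld."""
    buckets = defaultdict(list)
    for r in records:
        buckets[r[group_key]].append(r[value_key])
    return {
        g: {"n": len(vals), "mean": sum(vals) / len(vals)}
        for g, vals in buckets.items()
        if len(vals) >= min_group_size
    }


# Hypothetical product-test records.
raw = [
    {"name": f"user{i}", "email": f"user{i}@example.com", "skin_type": "oily", "rating": r}
    for i, r in enumerate([4, 5, 3, 4, 4])
] + [
    {"name": "user5", "email": "user5@example.com", "skin_type": "dry", "rating": 5},
    {"name": "user6", "email": "user6@example.com", "skin_type": "dry", "rating": 2},
]

clean = strip_identifiers(raw)
print(aggregate_with_suppression(clean, "skin_type", "rating"))
```

Only the "oily" group is reported here. The two "dry" respondents fall below the minimum group size, so their ratings are withheld rather than exposed.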

As the analysis phase concluded, Jessica’s ethical approach had a lasting impact on the project. The cosmetic products developed were not only effective but also reflected ethical principles that put individual choices and privacy at the forefront. Her case study shows how innovation and ethics can work in harmony, and how corporate expertise can drive positive change while upholding ethical standards. Jessica Reynolds’ journey, enriched by her corporate background, stands as a testament to the power of ethical data analysis. It underscores the importance of maintaining ethical considerations in data projects, especially in industries as personal as beauty and cosmetics.