In our everyday lives, we run into questions that pique our curiosity. Whether it’s deciding which route will get us home fastest or wondering if our favorite sports team will win the championship, we constantly make assumptions and look for answers. These ordinary experiences share their logic with a tool called hypothesis testing, which gives us a disciplined way to check our assumptions against evidence.

The reasoning behind hypothesis testing shows up in many forms, from choosing the best smartphone on the market to evaluating the effectiveness of a new workout routine. It helps us make evidence-based decisions, ask the right questions, and separate fact from fiction. By learning how it works, we can become detectives in our own lives, weighing the evidence before we settle on an answer.

What is a hypothesis test?

A hypothesis test is like a special detective toolkit that lets us use a small piece of the puzzle (sample data) to judge what the whole picture (population) might look like. Think of it like trying to work out what your whole department likes for lunch by asking a few of your colleagues. You’re using a small group (the sample) to draw conclusions (inferences) about everyone in your department (the population). One interesting thing about a hypothesis test is that it doesn’t just help us make these guesses. It also tells us how easily our results could have arisen by chance. This is the role of the p-value: the probability of seeing results at least as extreme as ours if the default assumption (the null hypothesis) were true. The smaller the p-value, the harder the data are to explain by chance alone.

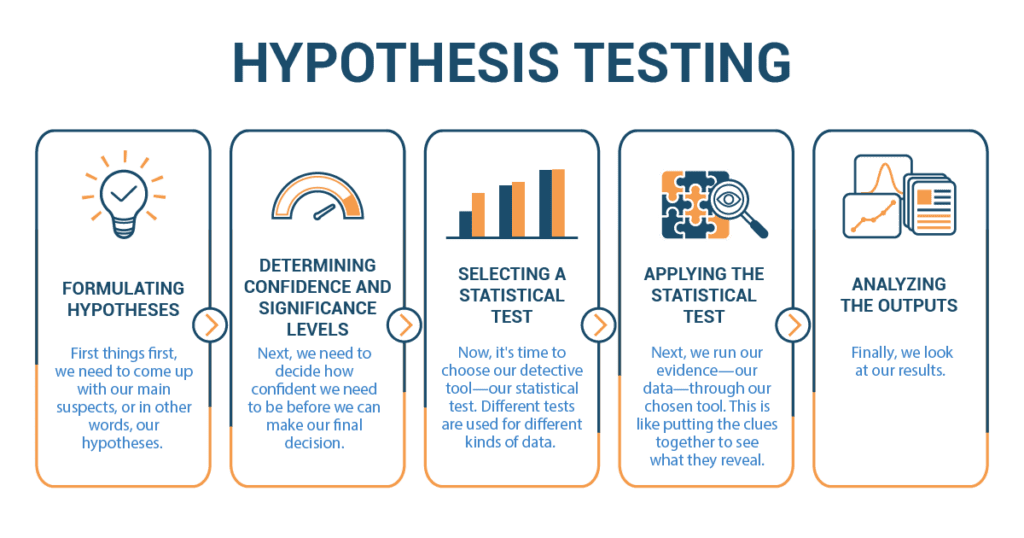

The steps in hypothesis testing

Formulating Hypotheses

To begin, we need to state our assumptions or, in statistical terms, our hypotheses. Imagine we’re trying to find out whether more employees prefer virtual meetings to in-person ones. Our null hypothesis (H0) would be “Employees prefer in-person meetings or have no preference” (at most half prefer virtual), while our alternative hypothesis (H1) would be “Employees prefer virtual meetings” (more than half do).

Determining Confidence and Significance Levels

Next, we must decide how strong the evidence has to be before we draw a conclusion. A confidence level of 95% corresponds to a significance level of 5%: we accept a 5% chance of concluding that virtual meetings are favored when they actually aren’t (a false positive, also called a Type I error). This is different from a margin of error, which describes how wide the uncertainty around an estimate is.

The significance level is the threshold we compare the p-value against. With a significance level of 5%, we’re saying that a p-value below 5% (commonly written as .05) is needed before we conclude that virtual meetings are the preferred mode.
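To see what a 5% significance level means in practice, here is a small simulation sketch in Python. It assumes a hypothetical survey of 100 employees who truly have no preference (each picks virtual with probability 0.5), repeats that survey many times, and counts how often a one-sided z-test for a proportion (a normal approximation, chosen here only for illustration) would wrongly conclude that virtual meetings are favored. The sample size, number of repetitions, seed, and function name are all assumptions made for this example.

```python
import random
from statistics import NormalDist


def false_positive_rate(n_employees=100, n_surveys=5000, alpha=0.05, seed=0):
    """Simulate surveys where H0 is true and return the share that reject H0."""
    rng = random.Random(seed)
    # One-sided critical value: reject H0 only when z is unusually large.
    z_crit = NormalDist().inv_cdf(1 - alpha)
    rejections = 0
    for _ in range(n_surveys):
        # Each employee picks "virtual" with probability 0.5 (no true preference).
        virtual = sum(rng.random() < 0.5 for _ in range(n_employees))
        # z-score of the observed count under H0 (normal approximation).
        z = (virtual - 0.5 * n_employees) / (0.25 * n_employees) ** 0.5
        if z > z_crit:
            rejections += 1
    return rejections / n_surveys


rate = false_positive_rate()
print(f"Share of surveys that wrongly reject H0: {rate:.3f}")
```

The printed share should land in the neighborhood of 5%. It will not match exactly, because the simulation is random and because head counts are whole numbers, so the test cannot hit the 5% threshold precisely.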

Selecting a Statistical Test

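With our hypotheses in place, we must decide on our analytical tool: the statistical test. The right test depends on the nature of the data. For this scenario, we might opt for a chi-square goodness-of-fit test, which compares observed counts with the counts we would expect if the null hypothesis were true, such as the number of employees who prefer virtual meetings against the number we’d expect if there were no preference. The chi-square test only measures how far the counts are from expectation, not in which direction, so we also need to check that the difference points toward virtual meetings.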

Applying the Statistical Test

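With our test selected, we run it on the survey responses. The test condenses the data into a test statistic, which summarizes how far the observed results are from what the null hypothesis predicts, and a p-value.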

Analyzing the Outputs

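Upon examining our results, if the p-value is below our significance level (5%), we reject the null hypothesis: the data give us reasonable evidence that virtual meetings are the preferred mode for employees. If the p-value is at or above 5%, we fail to reject the null hypothesis. That does not prove employees prefer in-person meetings. It only means our sample did not provide strong enough evidence either way.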
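The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a full analysis: the survey counts are made up, the function name is hypothetical, and the p-value formula used here is specific to a chi-square test with two categories (one degree of freedom). A statistics library would normally handle the general case.

```python
import math


def chi_square_two_categories(observed, expected):
    """Chi-square goodness-of-fit statistic and p-value for two categories."""
    stat = sum((o - e) ** 2 / e for o, e in zip(observed, expected))
    # With 1 degree of freedom, P(chi-square > stat) equals erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


# Hypothetical survey of 100 employees (numbers invented for illustration).
observed = [60, 40]  # [prefer virtual, prefer in-person]
expected = [50, 50]  # counts we would expect if there were no preference
alpha = 0.05         # significance level

stat, p_value = chi_square_two_categories(observed, expected)

# The chi-square test ignores direction, so also check that the
# difference actually points toward virtual meetings.
favors_virtual = observed[0] > expected[0]
reject_h0 = p_value < alpha and favors_virtual

print(f"chi-square = {stat:.2f}, p-value = {p_value:.4f}, reject H0: {reject_h0}")
```

With these invented counts the statistic is 4.0 and the p-value is about 0.0455, just under the 5% threshold, so the sketch rejects the null hypothesis. A split of 58 to 42 would not clear the threshold, which shows how sensitive the conclusion is to a handful of responses.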

Hypothesis testing is fundamentally about forming educated assumptions and then checking how well the data agree with them. A p-value is not the probability that a hypothesis is true, and a test never delivers a definitive answer, but it’s an invaluable tool for deciphering patterns in corporate data. So, don your analytical cap, and let’s embark on our next data-driven exploration!

Corporate Performance Enhancement: A Case Study

Meet Alex, a middle manager who heads a department in a multinational corporation. Like all leaders, Alex is constantly exploring ways to boost her team’s productivity and efficiency. She recently came across a study suggesting that teams incorporating mindfulness practices into their routines experienced improved decision-making skills. Intrigued, Alex wondered whether integrating mindfulness sessions into her team’s workflow could enhance their performance.

Alex’s hypothesis was: “Introducing mindfulness sessions into our routine will enhance the team’s decision-making skills.”

Aware that a hypothesis is simply a well-informed supposition that requires validation, Alex initiated a research project within her department. She gathered data on the team’s decision-making efficiency for three months, during which no mindfulness practices were introduced. This provided her with a benchmark for comparison.

Next, she introduced a 15-minute mindfulness session at the start of every workday for the following three months. Each month, she assessed the team’s decision-making efficiency.

But Alex’s exploration didn’t end there. She realized that her endeavor mirrored what corporate analysts and researchers undertake regularly. They postulate a potential correlation or impact, then accumulate data, akin to Alex’s assessment of her team’s decision-making skills, to verify if evidence substantiates their hypothesis.

After the three months of mindfulness sessions, Alex reviewed the data. She observed an improvement in the team’s decision-making efficiency compared with the benchmark period. Her colleagues also reported heightened focus and decreased stress during meetings. Although Alex recognized that her small study didn’t conclusively establish the advantages of mindfulness for all corporate teams, the positive outcomes were promising.

In essence, what Alex executed was a rudimentary form of a hypothesis test. She formulated a hypothesis, gathered and examined data to evaluate it, and drew conclusions based on her findings.
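A before-and-after comparison like Alex’s could be made more formal with a permutation test, sketched below in Python. The monthly scores are invented purely for illustration (the case study gives no numbers), the function name is hypothetical, and the approach assumes the six monthly scores would be interchangeable if mindfulness had no effect, which ignores things like seasonal trends.

```python
from itertools import combinations


def permutation_p_value(before, after):
    """One-sided exact permutation test: is the 'after' total unusually high?"""
    scores = before + after
    observed_total = sum(after)
    # Enumerate every way of labelling len(after) of the scores as "after".
    totals = [sum(group) for group in combinations(scores, len(after))]
    # Count labellings whose "after" total is at least as high as observed.
    at_least_as_high = sum(total >= observed_total for total in totals)
    return at_least_as_high / len(totals)


# Hypothetical monthly decision-making scores (made up for this example).
before = [62, 65, 63]  # three months without mindfulness sessions
after = [68, 70, 71]   # three months with mindfulness sessions

p_value = permutation_p_value(before, after)
print(f"One-sided permutation p-value: {p_value:.2f}")
```

With only three monthly scores per period there are just 20 possible labellings, so the p-value can never fall below 1/20 = 0.05, even when every "after" score beats every "before" score, as in this invented data. That is one way to see why a study of this size can be promising but not conclusive.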

Her initiative underscored the value of understanding hypothesis testing in the corporate sphere: it provides a systematic method to investigate an idea, gather evidence, and make data-driven decisions. Perhaps her insights will motivate others in the company to consider incorporating mindfulness into their daily routines.