Today, I want to take you on a journey, a voyage into the backbone of the digital revolution — a journey through the vibrant world of data in the machine learning process. Imagine being a detective, piecing together different clues to solve a grand mystery. Well, in the dynamic landscape of machine learning, data is your assortment of clues, your golden keys to unlocking patterns, trends, and answers that can revolutionize how we understand and interact with the world.

Data is like the rich vocabulary that a poet uses to craft vibrant stories, the palette of colors that an artist uses to paint masterpieces, offering a lens through which we can capture and depict the most intricate details of the world around us. In the machine learning process, it plays a pivotal role in ‘training’ our models, teaching them to understand, learn, and, eventually, make intelligent decisions.

As we delve deeper into this subject, we will explore how data can be both a teacher and a storyteller, guiding machines to learn from the past, analyze the present, and anticipate the future. From helping us predict weather forecasts more accurately to recommending the next cool song on your playlist, the possibilities with data are virtually endless!

The Role Data Plays in Machine Learning

Data isn’t just a part of the machine learning process; it is the heart of it, guiding each step and helping us create something truly remarkable. It’s our raw material, guide, and evaluator, helping us build solutions that can think and learn from experiences, just like humans!

- Data is the Fundamental Ingredient

Data is essential in the machine learning process, serving as the primary resource and determinant in creating intelligent learning solutions. The quality of data significantly influences the outcome of machine learning models. Like using quality ingredients in cooking, high-quality data is crucial to achieving reliable results. Poor or inaccurate data leads to ineffective models. Remember, garbage in, garbage out. - Data Specifies the Problem to be Solved

The nature of the data available often dictates the problems machine learning can address. For instance, abundant image data might direct focus toward image recognition tasks. The types of data collected, such as images of cars and bikes, guide the creation of models for specific recognition tasks.. - Data Determines the Type of Machine Learning

The kind of data we have also helps us decide the best learning method. Sometimes, we have clear examples to learn from, which is called supervised learning. Other times, we have to find hidden patterns without any examples, known as unsupervised learning. Then there’s a special style where the model learns from its mistakes, just like you learn a video game, getting better each time, known as reinforcement learning. - Data Dictates the Feasibility and Complexity of the Project

The size and complexity of a dataset can affect the project’s intricacy. Large and complex datasets require more advanced methods and increased computational resources, much like solving a complex puzzle requires more time and effort. - Data Drives Model Evaluation and Tuning

Different data subsets, such as training, validation, and testing data, are critical in evaluating and refining machine learning models. This process is essential for tuning model parameters and ensuring the model’s generalization capability. - Data Influences Model Update and Maintenance

Machine learning models require ongoing updates and adjustments with new data to remain effective, similar to the continual care needed in gardening.

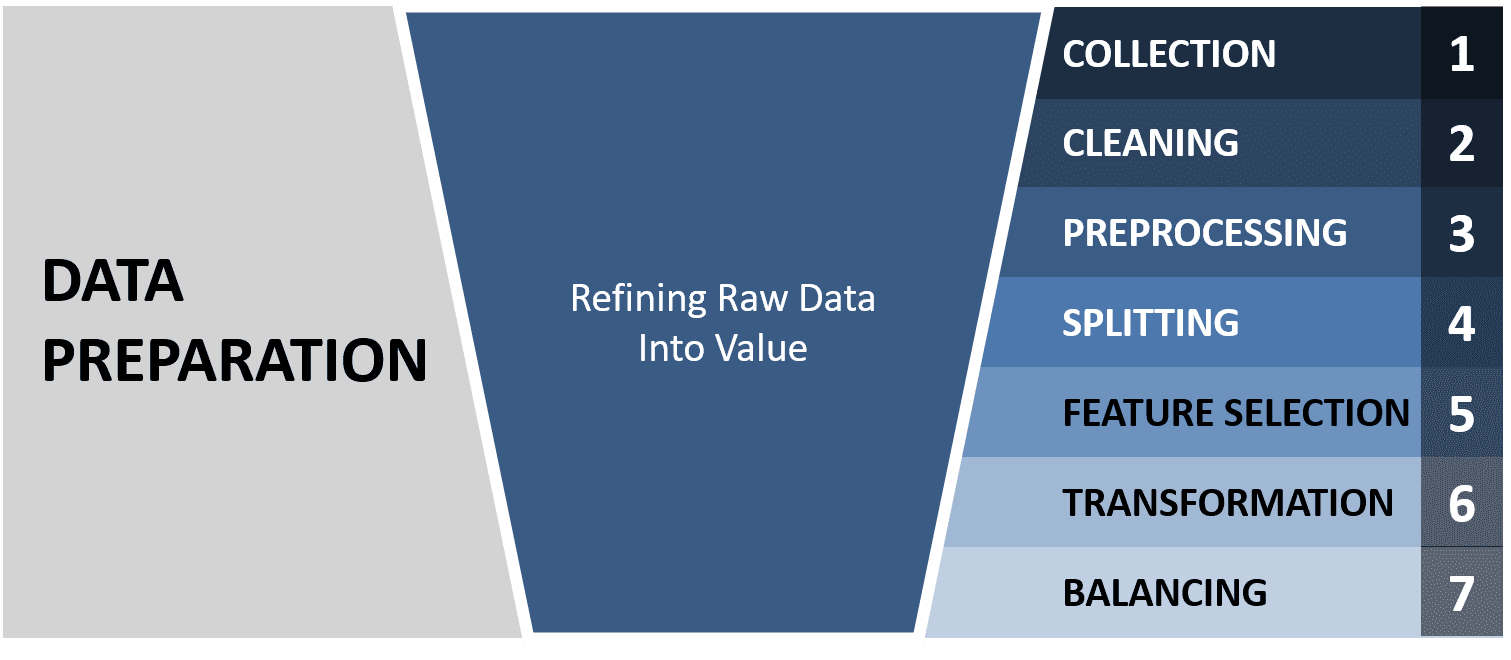

The Steps in the Data Preparation Stage

Data preparation is a critical stage in machine learning, involving several key steps that must be handled carefully and precisely.

- Data Collection

This stage involves gathering essential information from various sources, including websites, files, APIs, or databases. The data collected can be numerical, categorical, or textual, providing diverse inputs for machine learning models. - Data Cleaning

At this stage, the collected data is cleaned by removing errors, correcting inconsistencies, and ensuring a refined dataset is prepared for further processing. - Data Preprocessing

Cleaned data is then processed to make it interpretable by machine learning models. This involves converting different forms of data into a format suitable for modeling, such as numerical representation. - Data Splitting

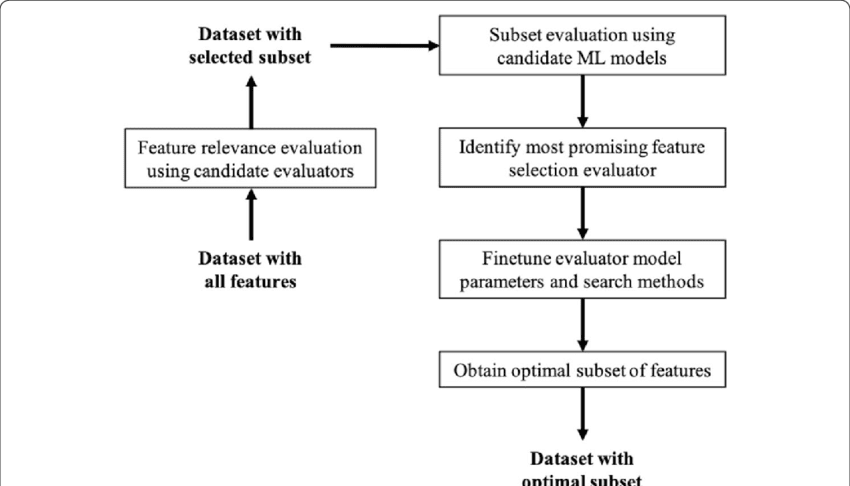

The processed data is divided into two parts: a training dataset for teaching the model and a testing dataset for evaluating its performance. This ensures the model learns to recognize patterns rather than just memorizing data. - Feature Selection

This step involves identifying and selecting the most relevant features or variables in the data that significantly contribute to the predictive performance of the model. - Data Transformation

Data is further refined through transformations to simplify and optimize the training process. This step involves modifying and standardizing the data to facilitate effective model learning.

- Data Balancing

To prevent biases in the machine learning model, the data is balanced to ensure equal representation of different categories or classes. This helps in creating a fair and unbiased model.

Jasmine’s Book Collection Dilemma

Jasmine has a vast collection of books, from mystery novels to science textbooks. But as her collection grew, it became increasingly difficult for her to manage and find the book she wanted at any given time. Imagine if Jasmine could take a picture of her bookshelf with her phone, and an app could tell her exactly where each book is located.

Now, to make this app work, Jasmine needs something very important: data. In the world of machine learning, data is like the fuel that powers a car; without it, we won’t get very far. But what kind of data does she need?

First, she would collect photos of her bookshelf, taken from different angles and various lighting conditions. These photos will help the app learn what different books look like on her shelf. This collection of photos is her “data.”

But it doesn’t stop there! Jasmine also writes down the exact location of each book in every photo, like a map guiding to a treasure. This detailed information, paired with the photos, is what we call “labeled data.”

By feeding this labeled data into her machine learning app, it learns to recognize patterns — for example, the distinctive spine of a book or a specific color pattern that indicates the book’s location. Over time, with enough data, the app can get pretty good at finding any book Jasmine is looking for, all on its own!