Imagine you’re cooking a grand feast for a special occasion. 👩🍳🥗🎉You’ve handpicked a recipe, shopped for the finest ingredients 🥩🌟, and set aside ample time to prepare. Now, would you knowingly pick bruised apples for your apple pie or use expired milk for your creamy mashed potatoes? Of course not! 🚫The quality of the ingredients you use directly impacts the taste and overall success of your meal.

Now, let’s replace the word ‘cooking’ with ‘data analysis,’ ‘recipe’ with ‘methodology,’ and ‘ingredients’ with ‘data.’ Suddenly, you’re no longer just a chef in your kitchen but a data analyst in the vast realm of information. Like in cooking, the quality of your data will influence your analysis, the insights you derive, and the decisions you make based on those insights.

Why is Poor-Quality Data Dangerous in Our Investigation?

If we accidentally collect the same information twice (duplicate data), such as counting the same pizza crumb twice, we could make some results seem more important than they are, which could lead us astray.

Finally, data that doesn’t make sense (invalid data), like a clue from a pet who couldn’t possibly eat the pizza, can change the real picture the data is trying to show us, leading us to wrong conclusions.

Ensuring Good Quality Data

Just like in our detective story, we need to double-check our clues to make sure they’re reliable.

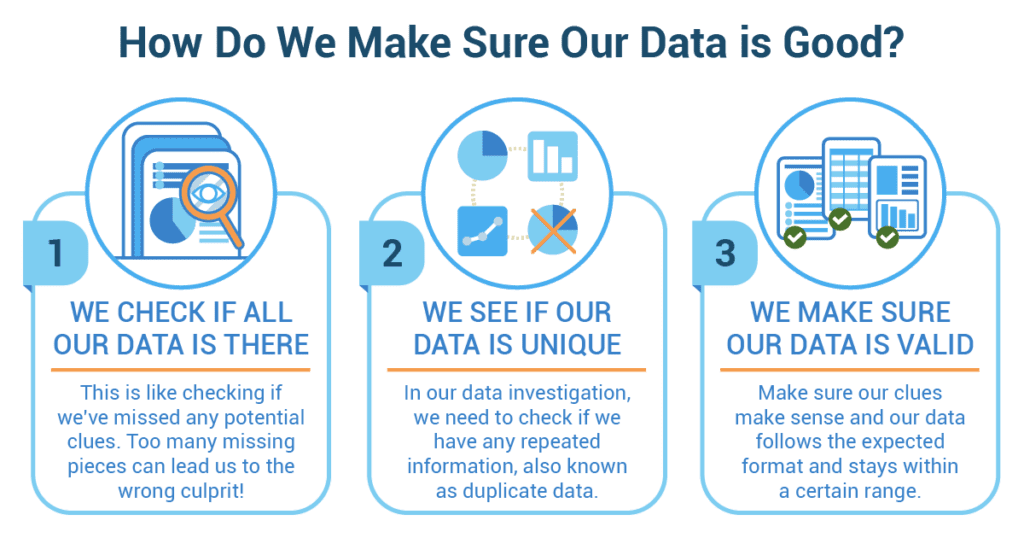

- We check if all our data is there

This is like checking if we’ve missed any potential clues, like forgetting to ask someone in the house about the pizza. If some data is missing, we need to know how much is missing. Too many missing pieces can lead us to the wrong culprit! - We see if our data is unique

Imagine if we counted the same crumb twice, making it seem like a bigger mess was left behind than there really was. In our data investigation, we need to check if we have any repeated information, also known as duplicate data. - We make sure our data is valid

This means making sure our clues make sense. If we found fish scales at the scene, but no one in the house had been near a fish, that clue (or data) would not be valid. In the same way, we have to make sure our data follows the expected format and stays within a certain range. Remember, being a data detective is a lot like solving a mystery. You need good, reliable clues to find the right answer. So, always make sure your data is complete, unique, and valid before jumping to any conclusions!

Lily’s Cosmic Data Quest: A Journey to the Stars

The Kepler Mission, a NASA initiative, provided an ample dataset with thousands of exoplanets and their unique characteristics. With a twinkle in her eyes, Lily downloaded the dataset, eager to decipher the cosmic stories hidden within.

However, she remembered her statistics teacher’s emphasis on the significance of data quality before jumping into any exploratory data analysis. It was a valuable lesson she equated with her favorite astronaut, Chris Hadfield, double-checking his spaceship’s equipment before a mission.

Lily started by checking if any data was missing. She noticed that some exoplanets lacked crucial details, like radius or orbital period. This was like trying to draw a planetary system with some planets invisible. She realized that including these in her analysis could skew her results and present an incomplete picture of the cosmos. She marked these entries and decided to exclude them from her analysis.

Next, Lily sought duplicates. Just as two identical aliens in her dataset would falsely suggest a Galactic Federation meeting, duplicate exoplanet entries could overstate their prevalence. Using her software, she identified and removed any duplicates, ensuring the uniqueness of her cosmic data.

Finally, she moved on to validate her data. One entry caught her attention—an exoplanet with an orbit time of mere minutes. Recalling her space documentaries, she realized that such a short orbital period was nearly impossible under known physics. This was her invalid data—a misfit in the celestial choir. With a quick adjustment, she made sure the data adhered to realistic values.

When Lily finally started her analysis, she had full confidence in her dataset. Her teacher was impressed not only with her fascinating findings about exoplanet types but also with her meticulous preparation. Her peers applauded, but Lily was already lost in her thoughts, gazing at the stars and imagining the undiscovered worlds her quality-assured data had hinted at.

Through this voyage, Lily didn’t just explore the exoplanets. She also discovered the importance of assessing data quality, a lesson she’d carry with her on every future cosmic data quest.