Imagine for a moment that you’ve just purchased a state-of-the-art coffee machine. You’ve seen these machines churn out the perfect brew in your favorite cafes, yet when you press the button for the first time, the result is not quite what you expected. That machine, as sophisticated as it might be, requires a calibration period. It needs to understand the specific type of beans you’re using, the exact amount of water, and the precise brewing time that’ll make your morning cup just right for you.

In many ways, our minds operate similarly. We learn from experience, adjust to new data, and fine-tune our responses to make better decisions in the future. What if I told you that there’s a digital realm where machines are trained to learn, adjust, and fine-tune, just like us? Welcome to the world of Google’s Teachable Machine.

Now, I know most of you might be wondering, ‘How does this relate to me, to our businesses, to our day-to-day?’ Believe it or not, the art and science of training these digital entities have parallels in everything we do, from that morning coffee ritual to making crucial corporate decisions.

The Magic Behind Training on Google’s Teachable Machine

The art of teaching a machine may sound like something out of a sci-fi novel, but in today’s rapidly advancing digital age, it’s becoming an everyday reality. Have you ever wondered what exactly happens when you hit the ‘Train’ button on Google’s Teachable Machine? It’s a symphony of data, algorithms, and iterative learning – all tucked away neatly behind a simple user interface.

Behind the simplicity

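Whenever you train a model on Google’s Teachable Machine, a good deal of machine learning machinery is at work in the background. The tool runs TensorFlow.js in your browser and, rather than starting entirely from scratch, builds on a model that has already been trained on a large dataset, which keeps the experience quick and smooth for you.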

Initializing

Imagine handing a blank sheet of paper to a child. It symbolizes potential. Similarly, our model, at the outset, is like an eager apprentice waiting to absorb knowledge, knowing nothing yet about the categories you want it to recognize.

Feeding information

It’s time to provide our young apprentice with some examples. We present them with data, like sunset images, and declare, “This, dear apprentice, is what a sunset looks like.”

The trial and error phase

With limited information, the model, much like a toddler trying to identify shapes, will make mistakes. And that’s okay! Mistakes are the stepping stones to learning.

Learning from mistakes

Every error our model makes is an opportunity. With every incorrect guess, it refines its understanding, tweaking internal parameters, inching closer to accurate recognition.

Iteration is key

As the model is exposed to more and more examples, it sharpens its skills. Every new image, sound, or piece of data is a new lesson, fortifying its understanding.

Testing

Once our model has been trained on plenty of examples, it’s time for a test. We present it with new, unseen data to ensure it’s genuinely grasped the concept and isn’t merely parroting back memorized answers.

Completion

After rigorous training and consistent performance on tests, our model is ready. It has now graduated from a naive learner to a seasoned identifier, capable of making predictions with confidence.
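The stages above can be sketched in a few lines of Python. This is a toy illustration only, not Teachable Machine’s actual code: it assumes a tiny logistic-regression model, and the `[redness, brightness]` features and the “sunset” data are invented for the example.

```python
import math

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def predict(weights, bias, features):
    """Return the model's confidence (0..1) that the sample is a sunset."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return sigmoid(z)

def train(samples, labels, epochs=200, learning_rate=0.5):
    # Initializing: all-zero parameters, so every first guess is 0.5.
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):                              # Iteration is key
        for features, label in zip(samples, labels):     # Feeding information
            guess = predict(weights, bias, features)     # Trial and error
            error = guess - label                        # Learning from mistakes
            weights = [w - learning_rate * error * x
                       for w, x in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias

def accuracy(weights, bias, samples, labels):
    """Fraction of samples whose thresholded guess matches the label."""
    correct = sum((predict(weights, bias, f) >= 0.5) == bool(l)
                  for f, l in zip(samples, labels))
    return correct / len(samples)

# Invented toy data: [redness, brightness]; 1 = sunset, 0 = not a sunset.
train_x = [[0.9, 0.3], [0.8, 0.4], [0.85, 0.2],
           [0.2, 0.9], [0.1, 0.8], [0.3, 0.7]]
train_y = [1, 1, 1, 0, 0, 0]

# Testing: samples the model never saw during training.
test_x = [[0.75, 0.35], [0.15, 0.85]]
test_y = [1, 0]

weights, bias = train(train_x, train_y)
print("held-out accuracy:", accuracy(weights, bias, test_x, test_y))
```

Each comment maps a line of the loop to one of the stages described above; a real image model has vastly more parameters, but the rhythm of guess, compare, and adjust is the same.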

All in your browser: The modern marvel

Perhaps one of the most astounding aspects of Teachable Machine is where all of this takes place: right in your browser. It’s like hosting a transformative classroom lesson entirely on your own personal computer.

Crafting Intelligence: A Guide to Google’s Teachable Machine

Imagine crafting an intelligent system, almost as if you’re nurturing a young mind to recognize and interpret the world around it. Google’s Teachable Machine offers this wondrous opportunity in a surprisingly intuitive manner. So, how do you transform this digital blank canvas into an adept machine learner? Let’s dive in!

1. Selecting a project type

Your first step is akin to choosing a subject in school. Decide whether you want your model to specialize in images, audio, or poses. Each choice offers a unique challenge and a distinct journey.

2. Providing data (samples)

Just as we learn from textbooks and lectures, our model learns from samples. The type of samples depends on the chosen project type:

- Image Model: Imagine you’re teaching someone to recognize objects. You’ll show them pictures, right? Here, you’ll upload images or capture them with your webcam for each category.

- Audio Model: Think of this as teaching someone to recognize sounds or words. You’ll provide the model with short audio snippets for each category.

- Pose Model: This is like teaching someone to understand body language. You’ll use your webcam or upload images that show specific poses.

3. Labeling data

Labels act as ‘names’ or ‘tags’ for each sample. It’s like telling a child, “This picture? That’s a cat.” or “This sound? That’s a guitar playing.” In Teachable Machine, you name each class and then add samples to it, so every sample, whether it’s an image of a fluffy feline or the audio of a chirping bird, carries the label of the class it was added to.
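Under the surface, a classifier works with numbers rather than names. Here is a minimal sketch of how labels are commonly turned into numeric targets; the helper names and file names are hypothetical, and this is not necessarily how Teachable Machine stores them internally.

```python
def build_label_index(labels):
    """Map each distinct label to an integer, in first-seen order."""
    index = {}
    for label in labels:
        if label not in index:
            index[label] = len(index)
    return index

def one_hot(label, label_index):
    """Encode a label as a vector with a single 1 at its class position."""
    vector = [0] * len(label_index)
    vector[label_index[label]] = 1
    return vector

# Hypothetical labeled samples: (file name, label).
samples = [("img_001.jpg", "cat"), ("img_002.jpg", "dog"), ("img_003.jpg", "cat")]

label_index = build_label_index(label for _, label in samples)
targets = [one_hot(label, label_index) for _, label in samples]
print(label_index)
print(targets)
```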

4. Training the model

With your samples labeled, hit the “Train Model” button. Now, the real magic happens. Within moments, right in your browser, Teachable Machine helps the model identify patterns, almost like connecting dots, enabling it to make informed predictions.

5. Testing and evaluating

No learning is complete without a test. Here is how to try out each model type:

- With an Image Model, introduce new images using your webcam.

- For the Audio Model, utter words or play sounds.

- For the Pose Model, strike a pose in front of the webcam.

For each, the model will give its best guess based on its training.
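A “best guess” usually means the class with the highest confidence. The sketch below assumes the model produces one raw score per class and that a softmax turns those scores into confidences that sum to 1; the class names and scores are made up for illustration.

```python
import math

def softmax(scores):
    """Convert raw scores into confidences that sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]  # subtract max for stability
    total = sum(exps)
    return [e / total for e in exps]

def best_guess(class_names, scores):
    """Return the most likely class and the model's confidence in it."""
    confidences = softmax(scores)
    best = max(range(len(class_names)), key=lambda i: confidences[i])
    return class_names[best], confidences[best]

# Made-up scores for a single webcam frame.
name, confidence = best_guess(["cat", "dog", "bird"], [2.0, 0.5, 0.1])
print(f"{name} ({confidence:.0%})")
```

A low top confidence is a useful hint that the model is unsure and might benefit from more samples for that class.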

6. Iterative refinement

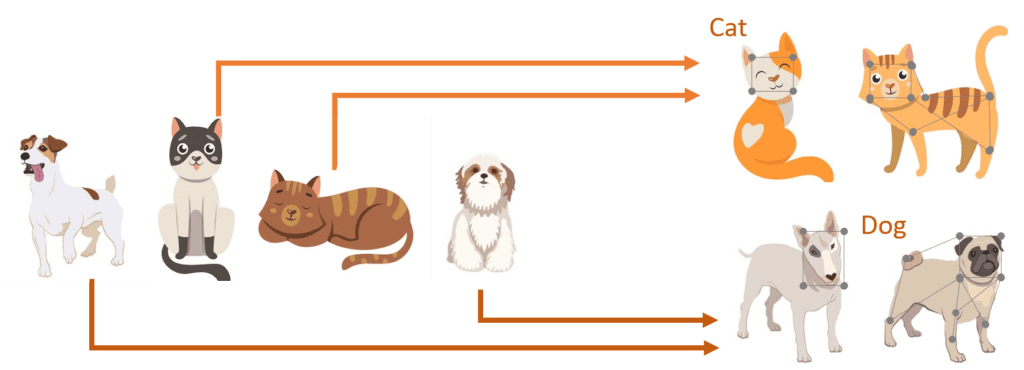

Did the model misidentify your cat as a dog? No worries! Machine learning thrives on iteration. Add more samples, tweak labels, refine categories, and retrain. With each loop, your model becomes sharper and wiser.

7. Exporting the model

Once you’re beaming with pride at your model’s prowess, export it. Like sending an apprentice out into the real world, this allows your model to venture outside, ready to be integrated into apps, websites, or other platforms.
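An exported model typically travels with a list of its class names so that an app can turn a numeric prediction back into a label. The sketch below assumes a labels file with one class per line, written as an index followed by the name (for example `0 cat`); treat that format as an assumption and check the file in your own export.

```python
def parse_labels(text):
    """Parse lines such as '0 cat' into a list of class names."""
    names = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        index, separator, name = line.partition(" ")
        # Fall back to the whole line if there is no index prefix.
        names.append(name if separator else index)
    return names

# Assumed file contents; in an app you would read them from disk instead.
example_file = "0 cat\n1 dog\n"
class_names = parse_labels(example_file)

predicted_index = 1  # hypothetical output of the exported model
print(class_names[predicted_index])
```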

Learn more about training a model with Google’s Teachable Machine here.

Best Practices in Teachable Machine

Ah, the digital age, where you can tutor a machine to tell a cat from a dog, a violin from a guitar, or any other delightful distinction you can conceive! Google’s Teachable Machine brings the realm of machine learning right to your fingertips. But as with any tool, the wisdom lies not just in using it but in mastering it. So, how can one train a model effectively?

Start simple

Before you attempt a marathon, you start with a walk around the block. Similarly, initiate your Teachable Machine journey with a basic project. Perhaps distinguishing between an apple and an orange? This ensures that you can focus on understanding the platform without the intricacies of a complex task.

Avoid overfitting

Imagine a student who only reads one book, and though they know every line of it, they falter when presented with questions from another. This is overfitting in machine learning. When a model is too finely tuned to specific training data, it might falter with real-world, diverse inputs. Ensure your data is varied enough to teach the model to generalize, not just memorize.
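One common way to spot overfitting is to hold back some samples, train on the rest, and compare the two accuracies. The following is a minimal sketch; the 20% test fraction and the accuracy figures are illustrative assumptions, not values taken from Teachable Machine.

```python
import random

def holdout_split(samples, test_fraction=0.2, seed=0):
    """Shuffle the samples and set a fraction aside for testing."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

def overfitting_gap(train_accuracy, test_accuracy):
    """A large positive gap suggests memorizing rather than generalizing."""
    return train_accuracy - test_accuracy

train_set, test_set = holdout_split(range(10))
print(len(train_set), "training samples,", len(test_set), "held out")

# Illustrative numbers: near-perfect on seen data, much weaker on unseen data.
print("gap:", round(overfitting_gap(0.99, 0.70), 2))
```

If the gap is large, more varied samples (different backgrounds, lighting, angles, voices) are usually a better remedy than more training on the same data.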

Understand limitations

Teachable Machine is a wonderful primer on machine learning, but it’s akin to learning arithmetic before diving into calculus. Real-world machine learning can be multi-layered and intricate. So, while Teachable Machine is capable within its scope, remember that it’s a starting point.

Privacy matters

The brilliance of Teachable Machine is its commitment to privacy: training runs in your browser, so your samples stay on your device unless you choose to save or share your work. But a word to the wise: always be judicious about the kind of data you use, especially when it may be personal or sensitive.

Iterative process

Your first painting might not be a masterpiece, and that’s okay. Similarly, your initial model might not be spot on. Refine, retrain, and test. With each iteration, like a sculptor chipping away, you’ll move closer to your desired outcome.

Stay curious

Once you’ve sailed the Teachable Machine waters, the ocean of machine learning awaits. Dive deeper and explore neural networks, natural language processing, or reinforcement learning. Let your journey with Teachable Machine be the spark that ignites a lifelong learning flame.

Navigating the Constraints: Recognizing Teachable Machine’s Limits

It’s a fascinating era for the world of machine learning. With platforms like Google’s Teachable Machine, even those new to the domain can dip their toes into the waters of AI. But as with any tool, while there is power, there are also limitations. Understanding these limits ensures that your journey with Teachable Machine is both fruitful and informed.

Limited model types

Teachable Machine shines when it comes to classification tasks – identifying images, discerning audio, and pinpointing poses. However, the vast universe of machine learning is not just about classification. Tasks such as regression (predicting values), clustering (grouping similar items), or generation (creating new content) are beyond its reach. Recognizing this ensures you pick the right tool for the right job.

Data privacy

A unique strength of Teachable Machine is its browser-centric design. Training happens on your own machine, so your samples don’t have to traverse the vast expanse of the internet. Yet, caution is your ally. If you’re dabbling with personal or sensitive data, especially when sharing or exporting models, be ever vigilant.

Lack of advanced preprocessing

Before diamonds shine, they’re polished. Similarly, data often needs preprocessing before it can be used. Teachable Machine’s simplicity, while a strength, means it doesn’t dive deep into advanced preprocessing, which can be pivotal in many projects.
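To give a flavor of what preprocessing means, here is one of the simplest steps: rescaling raw pixel values before they reach a model. The target range of -1 to 1 is an assumption made for this sketch; the right range depends on the model you are feeding.

```python
def normalize_pixels(pixels):
    """Rescale 0..255 pixel values to the range -1..1."""
    return [value / 127.5 - 1.0 for value in pixels]

# Three grayscale pixels: black, mid-gray, white.
print(normalize_pixels([0, 127.5, 255]))
```

More advanced preprocessing, such as cropping, denoising, or augmenting data with flipped and rotated copies, follows the same idea of tidying the input before learning begins.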

Limited feedback

Teachable Machine will offer you a glimpse of how your model performs, but it’s much like seeing only the tip of an iceberg. The deeper insights, the ‘whys’ of underperformance, or the roadmap to improvement are things you’ll have to navigate without the platform’s guidance.
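If you want to dig into the “whys” yourself, one place to start is tallying which classes get confused with which. This sketch assumes you have recorded the true label and the model’s guess for a handful of test samples; the data here is invented.

```python
from collections import Counter

def confusion_counts(true_labels, predicted_labels):
    """Count how often each (true, predicted) pair occurs."""
    return Counter(zip(true_labels, predicted_labels))

def per_class_accuracy(true_labels, predicted_labels):
    """Fraction of each class's samples that were guessed correctly."""
    totals = Counter(true_labels)
    counts = confusion_counts(true_labels, predicted_labels)
    return {label: counts[(label, label)] / totals[label] for label in totals}

# Invented test results.
truth = ["cat", "cat", "dog", "dog"]
guesses = ["cat", "dog", "dog", "dog"]

print(confusion_counts(truth, guesses))
print(per_class_accuracy(truth, guesses))
```

In this made-up run, half of the cats were mistaken for dogs, which points to where extra or more varied samples are needed.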

Potential for bias

A model, in many ways, is a reflection of its training data. Feed it a skewed perspective, and it gives you a skewed understanding. The responsibility rests with users to ensure their training datasets are both diverse and representative, lest the models amplify existing biases.
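A quick first check for skew is to count how many samples each class has. This is only a sketch: the 25% threshold is an arbitrary assumption, and balanced counts alone do not guarantee that the samples within each class are diverse.

```python
from collections import Counter

def class_balance(labels):
    """Return each class's share of the dataset."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: count / total for label, count in counts.items()}

def underrepresented(labels, min_share=0.25):
    """List the classes whose share falls below the chosen threshold."""
    return [label for label, share in class_balance(labels).items()
            if share < min_share]

# Invented dataset: eight cat samples and only two dog samples.
labels = ["cat"] * 8 + ["dog"] * 2
print(class_balance(labels))
print("needs more samples:", underrepresented(labels))
```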

No support for large datasets

Dream big, but remember, Teachable Machine prefers to carry lighter luggage. It’s not crafted to bear the weight of very large datasets. If your aspirations are grand, you might find the platform straining at the seams.

Generalization concerns

It’s one thing for a model to excel in the controlled environment of Teachable Machine, but the real test is in the chaotic, diverse world outside. Without representative training data, your model might falter when faced with broader datasets or unpredictable real-world scenarios.