Imagine you have a big pile of laundry to sort. You have different types of clothes: socks, shirts, pants, and scarves. The first time you ever did laundry, someone (maybe a parent or guardian) had to teach you how to sort these items. They would pick up an item, say a sock, and tell you, “This is a sock. Socks go in this pile.” They would repeat this process for shirts, pants, and scarves.

Now imagine that, instead of you, a robot that has never seen clothes before has to sort the laundry. You have to teach this robot to sort it just as you were taught.

Google’s Teachable Machine offers a set of modeling tasks for different needs. Whether you want to teach a machine to identify objects visually (as in the laundry example), tell sounds apart, or recognize body poses, this tool has you covered.

Types of Modeling Tasks in Google’s Teachable Machine

Image classification

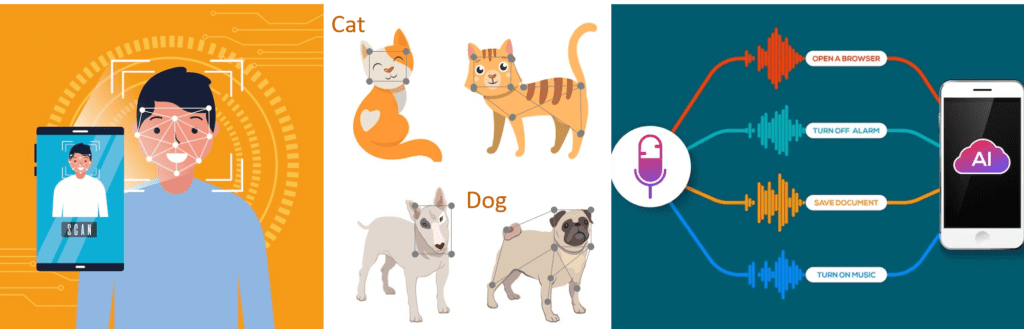

Think about how you instantly tell a slice of pizza from a bunch of bananas. Over time, your brain has been trained to differentiate objects, and you don’t even need to think about it. Google’s Teachable Machine does something similar with Image Classification: it takes a collection of labeled images and learns to sort them into categories. Once trained, it can predict the category of new, unseen images.

- Imagine you’re running a clothing e-commerce site. You can teach the machine to categorize uploaded images into shirts, pants, or shoes, streamlining the product addition process.
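The train-then-predict idea can be made concrete with a minimal Python sketch of a nearest-centroid classifier. This is not how Teachable Machine trains its models (it trains a neural network); it only illustrates learning categories from labeled examples and then labeling an example the model has never seen. The two-number “feature vectors” and the pizza/banana values are invented for illustration; a real image model extracts far richer features from the pixels.

```python
import math

# Hypothetical, hand-made features: each "image" is reduced to two numbers
# (say, how red it is and how elongated it is). Values are invented for illustration.
training_data = {
    "pizza": [[0.90, 0.30], [0.80, 0.35], [0.85, 0.25]],
    "banana": [[0.20, 0.90], [0.25, 0.85], [0.15, 0.95]],
}


def train(data):
    """Learn one centroid (the average feature vector) per category."""
    centroids = {}
    for label, examples in data.items():
        count = len(examples)
        centroids[label] = [sum(values) / count for values in zip(*examples)]
    return centroids


def predict(centroids, features):
    """Assign an unseen example to the category whose centroid is closest."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


model = train(training_data)
print(predict(model, [0.82, 0.30]))  # pizza
print(predict(model, [0.20, 0.88]))  # banana
```

Adding another category, such as shirts or pants in the e-commerce example, only means adding another labeled list of examples and training again.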

Audio classification

In Audio Classification, Google’s Teachable Machine can be trained to categorize different types of sounds. This could mean distinguishing the sound of a guitar from that of a piano, or detecting whether a machine’s noise indicates normal operation or a malfunction.

- For example, a company specializing in home security might use this to alert homeowners when a specific sound pattern, like glass breaking, is detected.

Pose classification

The movement of our bodies can tell a lot about what we’re doing. Whether we’re jumping, dancing, or simply waving, every action has a unique signature. Google’s Teachable Machine can be trained to recognize these actions. Pose Classification is all about detecting and categorizing body movements. This modeling task can be extremely versatile, offering solutions ranging from healthcare to entertainment.

- A fitness app developer can utilize pose classification to assess whether users are performing exercises correctly and offer real-time feedback.


Selecting the Right Modeling Task in Google’s Teachable Machine

Teachable Machine by Google offers an intuitive platform that lets people get started with machine learning without writing code. With several modeling tasks available, how do you choose the one that best fits your needs?

Image classification

Image classification is ideal when your core data is visual. This is perfect for those moments when you need to discern between different visual categories, whether they be plants, animals, or inanimate objects. The primary goal here is to build a machine that can proficiently distinguish between the different categories of images it’s been trained on, recognizing nuances in patterns, shades, and visual structures.

The result will be a model skilled at differentiating between the image categories you’ve provided, which can be incorporated into applications that rely on image recognition. The model’s accuracy depends heavily on having a diverse set of training images. Challenges arise when categories look very similar or when images are of low quality. Moreover, it classifies single still images, so it cannot interpret motion across the frames of a video.

Audio recognition

When sound clips are your primary data and you want to differentiate between distinct sounds, musical notes, or even voice commands, audio recognition is your go-to. The goal is to teach the machine to categorize a range of sounds, training it to detect variations in pitch, tone, and sound patterns. You’ll obtain a model that’s adept at telling apart the sound categories you’ve introduced, which can then be integrated into applications that depend on distinguishing sounds.

Just as with image classification, diversity in sound samples is crucial for accuracy. The model may struggle when background noise intrudes or when sounds are very similar. Processing long audio recordings efficiently also poses a hurdle.
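The idea of training a machine to notice differences in pitch can be illustrated with a short Python sketch that finds the strongest frequency in a clip using a naive discrete Fourier transform (DFT), then picks the category with the nearest reference pitch. It is a simplified stand-in, not Teachable Machine’s audio pipeline, and it ignores tone, timbre, and background noise; the sample rate, the synthetic tones, and the two reference pitches are assumptions made for the example.

```python
import math

SAMPLE_RATE = 4000  # samples per second (assumed for this toy example)
NUM_SAMPLES = 400   # a 0.1-second clip


def tone(freq_hz):
    """Synthesize a pure sine tone, standing in for a recorded sound."""
    return [math.sin(2 * math.pi * freq_hz * i / SAMPLE_RATE) for i in range(NUM_SAMPLES)]


def dominant_frequency(samples):
    """Return the strongest frequency (Hz) found by a naive discrete Fourier transform."""
    n = len(samples)
    best_bin, best_magnitude = 0, 0.0
    for k in range(1, n // 2):  # skip the constant (0 Hz) component
        real = sum(s * math.cos(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        imag = -sum(s * math.sin(2 * math.pi * k * i / n) for i, s in enumerate(samples))
        magnitude = math.hypot(real, imag)
        if magnitude > best_magnitude:
            best_bin, best_magnitude = k, magnitude
    return best_bin * SAMPLE_RATE / n


# Hypothetical reference pitches for two sound categories.
REFERENCE_PITCH_HZ = {"low hum": 220.0, "high whistle": 880.0}


def classify_by_pitch(samples):
    """Label a clip with the category whose reference pitch is closest."""
    pitch = dominant_frequency(samples)
    return min(REFERENCE_PITCH_HZ, key=lambda label: abs(REFERENCE_PITCH_HZ[label] - pitch))


print(dominant_frequency(tone(220)))  # 220.0
print(classify_by_pitch(tone(900)))   # high whistle
```

A naive DFT takes time proportional to the square of the clip length, which is fine for this toy clip; real audio tools use a fast Fourier transform instead.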

Pose detection

If body language, gestures, or movements take center stage in your data, then pose detection is your best bet. It works well for differentiating dance moves and exercise routines, though fine-grained gestures such as sign language can be harder to capture. The aim here is to train a model that can categorize various body movements or poses, recognizing subtle patterns in how the body is positioned. The end result is a model trained to distinguish between distinct body movement categories, making it a valuable asset for apps centered on fitness or gesture-controlled interfaces.

The model requires a clear view of the body or the body parts in question. It may struggle with intricate movements or when multiple people share the frame. External factors like lighting or the background can also reduce its accuracy.
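Because a pose model depends on seeing the relevant body parts, an app built on one might skip frames where those parts are not detected confidently. Pose estimators typically report each body keypoint with a position and a confidence score; the Python sketch below assumes data of that shape. The keypoint names, the required set, and the 0.5 threshold are hypothetical choices, not values taken from Teachable Machine.

```python
from dataclasses import dataclass


@dataclass
class Keypoint:
    name: str
    x: float
    y: float
    score: float  # detector confidence between 0 and 1


# Hypothetical requirements for classifying a full-body exercise such as a squat.
REQUIRED_PARTS = {"left_hip", "right_hip", "left_knee", "right_knee", "left_ankle", "right_ankle"}
MIN_SCORE = 0.5  # hypothetical confidence threshold


def frame_is_usable(keypoints, required=REQUIRED_PARTS, min_score=MIN_SCORE):
    """Return True only if every required body part was detected with enough confidence."""
    confident = {kp.name for kp in keypoints if kp.score >= min_score}
    return required <= confident


full_view = [Keypoint(name, 0.5, 0.5, 0.9) for name in REQUIRED_PARTS]
# The same frame, but with the ankles out of view (low confidence).
cropped = [
    Keypoint(kp.name, kp.x, kp.y, 0.1 if "ankle" in kp.name else kp.score)
    for kp in full_view
]

print(frame_is_usable(full_view))  # True
print(frame_is_usable(cropped))    # False
```

Skipping unusable frames, or asking the user to step back into view, avoids feeding the classifier frames it cannot judge reliably.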

Best Practices for Selecting a Modeling Task on Google’s Teachable Machine

Simplicity

Begin your Teachable Machine adventure with a task that’s straightforward and well suited to machine learning. Starting with a basic exercise, such as an image classifier that tells dogs from cats, can be encouraging. Keeping it simple at first helps you understand machine learning’s foundational principles.

Clear objective

When we tell a machine what to do, we need to be super clear about what we give it and what we want back. Let’s say we’re teaching it about different types of music, like rock, jazz, and classical. We play the music (that’s what we give) and ask the machine to tell us which type it is (that’s what we want back). It’s like giving someone easy-to-follow instructions: the clearer we are, the better they do the job.
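One way to pin down “what we want back” is to write the possible answers down before training. A classifier of this kind typically returns one score per category, and the answer is the highest-scoring one. The Python sketch below assumes the model’s output is a list of three scores in the same order as the genre names; the scores shown are made up.

```python
GENRES = ("rock", "jazz", "classical")  # what we want back: exactly one of these


def decode(scores):
    """Turn one score per genre into a single answer plus its score."""
    if len(scores) != len(GENRES):
        raise ValueError(f"expected {len(GENRES)} scores, got {len(scores)}")
    best = max(range(len(GENRES)), key=lambda i: scores[i])
    return GENRES[best], scores[best]


# Made-up scores for one music clip: the clip is what we give, this is what comes back.
print(decode([0.1, 0.7, 0.2]))  # ('jazz', 0.7)
```

Writing the label list down first also exposes a vague objective early: if you cannot list the answers you want, the task is not clear enough yet.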

Consider time, resources, and the machine’s capabilities

Before starting a project, it’s important to check that we have everything we need and that Teachable Machine can handle it. For example, predicting the weather sounds cool, but it needs lots of historical data and isn’t an image, sound, or pose task, so it doesn’t fit what Teachable Machine is built for. It’s like setting goals we know we can reach. This way, we avoid getting frustrated and make sure we get good results. Plus, it helps us use our time and tools in the best way possible.

Seek meaningful outcomes

Picking the right project means getting results that help us understand or solve real problems. For example, figuring out feelings from what people write can help in areas like customer service or advertising. When our project makes a difference in real life, it shows how powerful machine learning can be. And when we see our work making a difference, it encourages us to keep learning and trying new things. It’s like a never-ending journey of discovery and making things better.

Pitfalls and Precautions: Selecting a Modeling Task on Google’s Teachable Machine

- Understand complexity

Imagine a student who is excited about recognizing faces in live video but doesn’t know all the tricky parts involved. This can lead to problems because the data is too complicated or there isn’t enough of it, which can make someone new to machine learning feel discouraged and want to give up. It’s like trying to swim across a huge lake before learning to swim in a small pool.

Fix: Starting with simpler projects, like sorting pictures or sounds, helps us understand what the Teachable Machine can and can’t do.

- Data availability

Taking on a project without checking whether you have the right data is like trying to bake a cake without all the ingredients. Imagine someone wanting to predict how the stock market will move without having the right information. If the training data is poor or there isn’t enough of it, the predictions won’t work well.
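A quick check before training can catch the “not enough data” problem early. The Python sketch below counts examples per category and flags categories that are too small or badly outnumbered. The minimum of 50 examples per category and the 3-to-1 imbalance limit are hypothetical thresholds chosen for illustration, not Teachable Machine requirements.

```python
from collections import Counter

MIN_PER_CLASS = 50   # hypothetical minimum number of examples per category
MAX_IMBALANCE = 3.0  # hypothetical: largest category at most 3x the smallest


def check_dataset(labels):
    """Return a list of human-readable problems found in the training labels."""
    counts = Counter(labels)
    problems = []
    if len(counts) < 2:
        problems.append("need at least two categories to classify")
    for label, n in sorted(counts.items()):
        if n < MIN_PER_CLASS:
            problems.append(f"category {label!r} has only {n} examples (minimum {MIN_PER_CLASS})")
    if counts and max(counts.values()) > MAX_IMBALANCE * min(counts.values()):
        problems.append("categories are badly imbalanced")
    return problems


# Laundry example: plenty of socks and shirts, very few scarves.
labels = ["sock"] * 60 + ["shirt"] * 55 + ["scarf"] * 10
for problem in check_dataset(labels):
    print(problem)
```

Here the scarves are flagged twice: once for having too few examples and once because socks outnumber them six to one.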

Fix: Make sure you have enough good-quality data before you start, just as top chefs need the best ingredients to make an amazing dish.

- Overlooking practical application

Creating a project without thinking about how it’ll work in real life is like building a fancy toy car that can’t be played with. This can waste a lot of time or even cause problems.

Fix: It’s not just about making something that works on paper; it has to make sense in the real world, too. The best projects are both smart and useful.

- Ethical considerations

Choosing a project without thinking about what’s right or wrong can be risky. Imagine making a tool that guesses personal details about someone. It might sound cool, but it could upset a lot of people and even break privacy rules. Mistakes like this can get you in trouble, upset people, or cost you their trust. Doing what’s right isn’t just something we learn in school; it’s super important when working with technology, too.

Fix: Before starting a project, it’s a good idea to think about whether it’s the right thing to do and maybe even ask someone who knows more about it.

Case Study: Teachable Machines Improve Accessibility

Here are key points from research on using teachable machines to improve accessibility for people with disabilities:

Personalization for Individuals with Disabilities: Teachable machines can empower accessibility research by enabling individuals with disabilities to personalize data-driven assistive technology. This means that users can customize the technology to suit their specific needs and conditions.

Potential for Increasing Independent Living: Machine learning, including teachable machines, holds great promise for enhancing independent living for people with disabilities.

Specific Use Case – Object Recognizers for the Blind: The concept of teachable machines is demonstrated with a concrete example where object recognizers are trained by and for blind users. This showcases the practical applications and benefits of the technology in real-world scenarios.

Read more here:

Kacorri, H. (2017). Teachable machines for accessibility. ACM SIGACCESS Accessibility and Computing, (119), 10-18.