After meticulously crafting your machine learning model, sourcing the best data, and fine-tuning its parameters, you now stand at a pivotal moment: deployment. It’s akin to a master chef who, after countless hours perfecting a dish, is finally ready to serve it.

You’ve successfully navigated the complexities of the development process, ensuring every detail of your model is perfected. However, much like that chef’s dish, if it isn’t served, it remains a hidden gem, unseen and unappreciated by those it was crafted for.

Deploying your machine learning model is analogous to presenting that gourmet dish to diners. It’s the step that bridges the gap between intricate development and real-world application. Just as the true essence of a dish is experienced when it’s tasted, a machine learning model’s full impact is realized when it’s deployed and starts making real-world decisions.

You’ve mastered the art of creating the model; now, let’s embark on the equally crucial journey of bringing it to the world. Dive into the realm where your machine learning model transforms from a theoretical masterpiece into a practical tool, starting with why deployment matters so much.

The Art of Deployment – Bringing Machine Learning to Life

A machine learning model, despite all its intricacies and accuracy, loses its significance if it never sets sail into the real world. That voyage is what we call deployment, the pivotal phase that turns a model’s potential into actual results.

Deployment completes the data science life cycle

When a machine learning model is trained, it initially lives in a local environment, perhaps your own computer. Within this cocoon, it performs excellently on the data you developed it with, demonstrating great promise. But unless it’s deployed, it can’t make predictions on real-world data; it’s like a sailboat confined to the backyard. Deployment serves as a bridge, moving the model from the development stage to a stage where end users can interact with it. The model is then no longer merely a scientist’s experiment but a tool ready to take on new challenges by making predictions on fresh, real-world data.

Deployment makes machine learning models operational and practical

Once deployed, a model starts interacting with real business data, whether it’s predicting stock prices, recommending products, or serving any of a myriad of other applications. Deployment makes these models active players in the business, delivering tangible results. While a model might have shown commendable accuracy during testing, it’s only when deployed that we truly see its mettle. Real-world data is often messier, more varied, and less predictable. Through deployment, we learn how the model performs on this new, unseen data, which is crucial feedback.

Deployment allows the model to learn and improve over time

Once a model is deployed, its journey doesn’t end. As it interacts with new data, we can monitor its performance. This iterative cycle allows us to recalibrate and update the model, ensuring it remains relevant and accurate. Think of it as tuning the sails and rudder of our sailboat as it confronts different sea conditions.
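To make the monitoring step concrete, here is a minimal sketch, assuming ground-truth labels eventually arrive for at least some predictions. `RollingAccuracyMonitor` is a hypothetical helper, and its window size and accuracy threshold are illustrative assumptions rather than recommended values; it simply flags when accuracy over the most recent predictions drops low enough to justify retraining.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Hypothetical helper: tracks accuracy over the most recent `window` labelled predictions."""

    def __init__(self, window: int = 100, threshold: float = 0.9) -> None:
        self.window = window        # illustrative size, not a recommended value
        self.threshold = threshold  # illustrative minimum acceptable accuracy
        # deque(maxlen=...) discards the oldest outcome automatically
        self.outcomes = deque(maxlen=window)

    def record(self, prediction, actual) -> None:
        """Store whether a past prediction matched the ground truth once it becomes known."""
        self.outcomes.append(prediction == actual)

    def accuracy(self) -> float:
        if not self.outcomes:
            return 1.0  # no evidence yet, so treat the model as healthy
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        # Only decide once the window is full, so a few early misses don't trigger retraining
        return len(self.outcomes) == self.window and self.accuracy() < self.threshold


# Usage: with a window of 4, two misses out of four drop accuracy to 0.5
monitor = RollingAccuracyMonitor(window=4, threshold=0.75)
for prediction, actual in [(1, 1), (1, 0), (0, 1), (1, 1)]:
    monitor.record(prediction, actual)
print(monitor.accuracy(), monitor.needs_retraining())  # 0.5 True
```

Requiring a full window before flagging is a deliberate choice: it trades a slower reaction for fewer false alarms right after deployment.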

Deployment ensures real-world applicability and value

The ultimate goal of machine learning is not to achieve high accuracy on a dataset but to solve real-world problems. By deploying a model, we ensure its applicability in real scenarios, whether that’s helping doctors diagnose diseases, assisting banks in detecting fraudulent transactions, or any other practical use case. Through deployment, the model goes beyond theoretical confines and directly influences business decisions and actions.

Deploying Your Machine Learning Model – A Step-by-Step Guide

When you hear the term ‘deployment,’ you might think of astronauts preparing for a space journey. While deploying a machine learning model might not take you to the stars, it’s undoubtedly a journey – one that involves several steps to ensure that the model functions optimally in its intended environment.

1. Choose a deployment method

Just like there’s no single correct way to solve a puzzle, there’s no one-size-fits-all deployment method. Here are the most common ones:

- Local Deployment: This is like having a home garden; everything is stored locally on your machine, making it easy to access and manage. However, no one outside your machine can reach it.

- Cloud Deployment: Think of this as renting a plot in a community garden. Providers like AWS, Google Cloud, and Azure offer cloud services where models can be hosted, making them globally accessible and scalable.

- Web Service/API Deployment: This method involves encapsulating your model within a web service, allowing other software or services to interact with it via API calls.

- Deployment within a Machine Learning Pipeline: For large-scale applications, models can be integrated into larger pipelines that automate retraining and handle data seamlessly.
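For the web service/API route, the sketch below wraps a model in a small HTTP endpoint using only Python’s standard library (`http.server`). The `/predict` path, the JSON shape `{"features": [...]}`, and the `predict` function with its fixed weights are hypothetical placeholders for your own trained model; a production service would typically sit behind a dedicated web framework and server.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features: list[float]) -> float:
    """Hypothetical stand-in for a trained model: a fixed linear scorer."""
    weights = [0.4, -0.2, 0.1]  # illustrative weights, not learned from data
    return sum(w * x for w, x in zip(weights, features))


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/predict":
            self.send_error(404, "Unknown endpoint")
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            features = [float(x) for x in payload["features"]]
        except (ValueError, KeyError, TypeError):
            # Bad length header, malformed JSON, a missing key, or non-numeric features
            self.send_error(400, "Bad request", 'Expected JSON like {"features": [1.0, 2.0, 3.0]}')
            return
        body = json.dumps({"prediction": predict(features)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Serve predictions until interrupted (port 8000 is an arbitrary choice)."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

Calling `run()` starts the server; a client could then send `curl -X POST http://127.0.0.1:8000/predict -d '{"features": [1.0, 2.0, 3.0]}'` and receive a JSON object containing the prediction. Because the model sits behind a plain HTTP contract, any other software can call it without knowing how it was built.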

Your decision here depends on the nature of your model, the intended audience, how big or small the scale of deployment is, and the resources at hand. It’s essential to weigh these factors to choose the right deployment avenue.

2. Develop and test a data layer

Imagine the foundation of a house; if it’s shaky, the entire structure can collapse. Similarly, the data layer is the bedrock of deployment: it manages how data is retrieved and prepared for the model, both for training and for predictions. A reliable data layer ensures reproducibility and efficient data handling. Without it, models might produce inconsistent results or fail due to data-related issues.

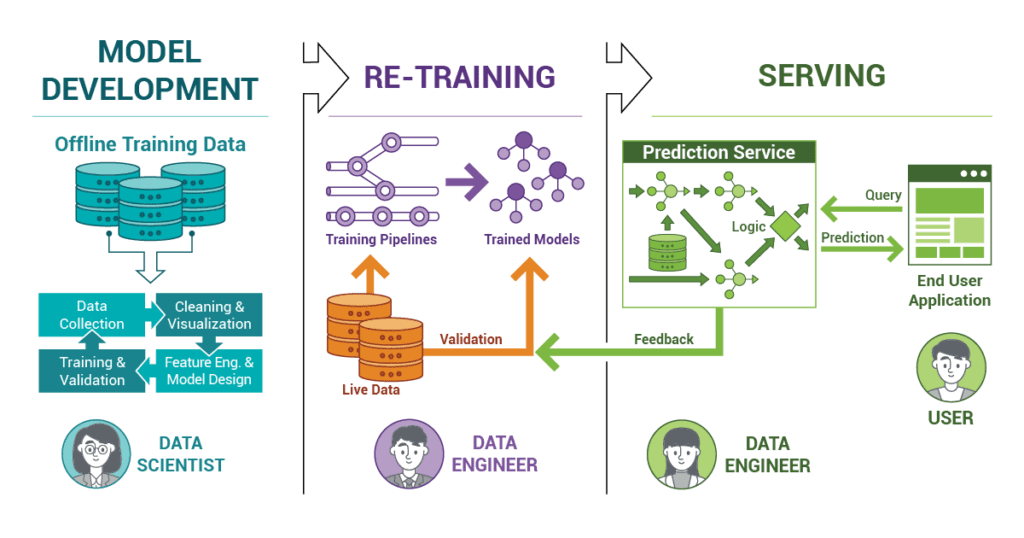
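One way to picture such a data layer is the sketch below, assuming CSV input and a hypothetical three-column `SCHEMA`. It rejects records that don’t match the schema and uses a fixed random seed so the train/test split is identical on every run, which is what reproducibility means here. The column names, types, and seed are illustrative assumptions.

```python
import csv
import io
import random

# Hypothetical schema: column name -> type each value must convert to
SCHEMA = {"age": float, "income": float, "label": int}


class DataLayer:
    """Loads, validates, and reproducibly splits tabular data (illustrative sketch)."""

    def __init__(self, seed: int = 42) -> None:
        self.seed = seed  # fixed seed => identical splits on every run

    def load(self, lines) -> list[dict]:
        """Parse CSV lines (e.g. an open file) and coerce each row to SCHEMA."""
        reader = csv.DictReader(lines)
        missing = set(SCHEMA) - set(reader.fieldnames or [])
        if missing:
            raise ValueError(f"missing columns: {sorted(missing)}")
        rows = []
        # Line 1 is the header; assumes no record spans multiple lines
        for line_no, raw in enumerate(reader, start=2):
            try:
                rows.append({col: cast(raw[col]) for col, cast in SCHEMA.items()})
            except (TypeError, ValueError) as exc:
                raise ValueError(f"invalid value on line {line_no}: {exc}") from exc
        return rows

    def split(self, rows: list[dict], test_fraction: float = 0.2):
        """Shuffle a copy with a seeded RNG, then cut it into train and test sets."""
        shuffled = list(rows)
        random.Random(self.seed).shuffle(shuffled)
        cut = int(len(shuffled) * (1 - test_fraction))
        return shuffled[:cut], shuffled[cut:]


# Usage with an in-memory CSV standing in for a real data source
data = io.StringIO("age,income,label\n30,50000,1\n45,62000,0\n22,18000,0\n51,90000,1\n38,40000,0\n")
layer = DataLayer(seed=7)
rows = layer.load(data)
train, test = layer.split(rows)
```

Failing loudly on a bad record, rather than silently skipping it, is one design choice; skipping and logging is a reasonable alternative when occasional bad rows are expected.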

3. Develop and test a (re-)training layer

Machine learning models undergo a training phase, much like employees undergo training sessions. In this layer, a learning algorithm fits the model to the data so it can perform its designated task, and the same layer is reused to retrain the model as new data arrives. It requires careful development and testing to ensure that the model learns correctly from the data and generalizes well to unseen data. For the model to deliver accurate and consistent predictions, especially on new data, this layer must be rigorously optimized and fine-tuned.
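As a minimal sketch of a (re-)training layer, the code below fits a one-feature linear regression with ordinary least squares, scores it on held-out validation data, and replaces the currently deployed model only if the new candidate generalizes better. The `LinearModel`, `fit`, `mse`, and `retrain` names and the promote-if-better rule are hypothetical choices for illustration; a real system would more likely use a library model and richer evaluation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinearModel:
    """A one-feature linear model y = slope * x + intercept (illustrative)."""
    slope: float
    intercept: float

    def predict(self, x: float) -> float:
        return self.slope * x + self.intercept


def fit(xs: list[float], ys: list[float]) -> LinearModel:
    """Ordinary least squares for a single feature, in closed form."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        raise ValueError("need at least two distinct x values")
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = cov_xy / var_x
    return LinearModel(slope, mean_y - slope * mean_x)


def mse(model: LinearModel, xs: list[float], ys: list[float]) -> float:
    """Mean squared error, used here as the generalization check."""
    return sum((model.predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def retrain(current, train, validation):
    """Fit a candidate on `train`; promote it only if it beats `current` on `validation`.

    `train` and `validation` are (xs, ys) tuples; `current` may be None for the first run.
    """
    candidate = fit(*train)
    if current is None or mse(candidate, *validation) < mse(current, *validation):
        return candidate
    return current


# Usage: the data follows y = 2x + 1, so the candidate beats a stale model
stale = LinearModel(slope=1.0, intercept=0.0)
model = retrain(stale, ([0, 1, 2, 3], [1, 3, 5, 7]), ([4, 5], [9, 11]))
print(model)  # LinearModel(slope=2.0, intercept=1.0)
```

Passing `None` as the current model covers initial training, so the same function serves both the first fit and every later retraining run.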

4. Develop and monitor a serving layer

Once the model is trained, it needs to make predictions on new data. The serving layer acts as a messenger: it takes in fresh data, lets the model predict, and then presents the predictions. This layer must be efficient, preprocessing data quickly, passing it to the model, and returning predictions without hiccups. It should also be monitored, so that slowdowns or failures are noticed early.
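The sketch below shows the serving flow described above: preprocess the raw request, pass it to the model, format the prediction, and record how long each request took so the layer can be monitored. The feature names, normalization constants, stand-in `model` function, and 0.5 decision threshold are all hypothetical; the key detail is that preprocessing reuses statistics from training, so serving inputs look like training inputs.

```python
import math
import statistics
import time

# Hypothetical preprocessing statistics saved at training time (illustrative values)
FEATURE_MEANS = [40.0, 55000.0]  # age, income
FEATURE_STDS = [10.0, 20000.0]


def preprocess(raw: dict) -> list[float]:
    """Standardize raw inputs with the same statistics used during training."""
    values = [float(raw["age"]), float(raw["income"])]
    return [(v - m) / s for v, m, s in zip(values, FEATURE_MEANS, FEATURE_STDS)]


def model(features: list[float]) -> float:
    """Hypothetical stand-in for a trained model: a logistic score in (0, 1)."""
    z = 0.8 * features[0] + 1.2 * features[1]
    return 1 / (1 + math.exp(-z))


class ServingLayer:
    def __init__(self, threshold: float = 0.5) -> None:
        self.threshold = threshold  # illustrative decision cut-off
        self.latencies_ms: list[float] = []

    def handle(self, raw: dict) -> dict:
        """Preprocess, predict, and format one request, timing the whole path."""
        start = time.perf_counter()
        score = model(preprocess(raw))
        response = {"score": round(score, 4), "label": int(score >= self.threshold)}
        self.latencies_ms.append((time.perf_counter() - start) * 1000)
        return response

    def p95_latency_ms(self) -> float:
        """Approximate 95th-percentile latency over all requests served so far."""
        if len(self.latencies_ms) < 2:
            return max(self.latencies_ms, default=0.0)
        # quantiles(n=20) returns 19 cut points; the last one is the 95th percentile
        return statistics.quantiles(self.latencies_ms, n=20)[-1]


layer = ServingLayer()
print(layer.handle({"age": 40, "income": 55000}))  # {'score': 0.5, 'label': 1}
for _ in range(100):  # crude load simulation with synthetic requests
    layer.handle({"age": 30, "income": 20000})
print(f"p95 latency: {layer.p95_latency_ms():.3f} ms")
```

Tracking a high percentile rather than the average is a common choice, because a few slow requests can hurt users even when the mean looks healthy.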

5. Design and build frontend UX/UI for a machine learning system

Ever been to a restaurant? The menu, the decor, and the ambiance constitute your experience. Similarly, the frontend is the interface users interact with when engaging with your model. The easier and more intuitive this interface is, the better the user experience. It’s where users provide inputs and where they receive and interpret the model’s predictions.

The Deployment Decision – The Cornerstone of Machine Learning Systems in Business

Imagine you’ve just purchased a high-performance sports car. Its potential is incredible, but how well it performs depends on the road you choose. Would you unleash its power on a rough, dirt road? Or on a smooth racetrack tailored for speed? Similarly, the choice of deployment method is vital for a machine learning system. Let’s dive deeper into why.

- Enhancing model performance

The deployment avenue directly affects the model’s efficiency. The right route lets it work optimally, like a car on the perfect track. A well-matched deployment strategy delivers consistent, accurate results, eliminating the “bumps” in performance. Just as a highway can handle more cars than a local street, some deployment methods excel at processing vast amounts of data swiftly. Selecting a deployment technique aligned with your model’s requirements can significantly elevate its performance, much like a tailored suit fits better than a generic one.

- Ensuring scalability

In the corporate world, as data volumes swell and demands shift, your machine learning system must scale without hiccups. Choosing a deployment method designed for scalability ensures that as your business grows, your system can keep up without compromising performance.

- Cost implications

Each deployment method has its price tag. Some are economical, while others offer advanced features at a higher price. Some deployment techniques, although powerful, demand more computing power or storage space, which raises overall running costs.

- Security and compliance

Different deployment methods offer different degrees of security. Because corporate data is invaluable, this choice is paramount. Depending on the sensitivity and nature of your data, certain deployment avenues give you a tighter grip on data protection.

- Flexibility and versatility

The corporate environment is dynamic. The right deployment method lets your system adjust quickly to model updates or shifts in the industry, so it can adapt to new data patterns or evolving business needs.

- Seamless integration with existing infrastructure

The deployment decision isn’t just about the machine learning model. It’s about ensuring it dovetails with existing technology, guaranteeing an uninterrupted, smooth operation.

Navigating the Deployment Landscape – Finding the Perfect Fit for Your Machine Learning Model

Choosing the right deployment method for a machine learning model is akin to selecting the perfect vehicle for a specific terrain. One must consider the nature of the journey, the challenges along the way, and the goal. So, how do you determine the most fitting deployment technique for your model and application?

- Assess your specific application needs

Start by clearly defining the goals of your machine learning model. What data will it process? What results do you expect? Draft a matrix of your application’s vital features and requirements. A visual aid like this makes it easier to compare deployment options, highlighting their strengths and weaknesses.

- Consider the operational environment

Where will the model run? In the cloud? In a private data center? Or perhaps on a mobile device? Your operational environment may bring inherent constraints or advantages. If you’re cloud-centric, cloud deployment is the logical choice. But if your data privacy requirements are stringent, you might opt for on-premise servers.

- Analyze scalability and performance requirements

What volume of requests will your model handle? Understanding this is crucial for seamless processing. For models processing a deluge of data, a scalable, high-performing deployment method is imperative. Use load simulations to mimic varied data volumes and observe how the model responds, making sure it won’t buckle under pressure.

- Be aware of tool and platform compatibility

Your chosen deployment method must be compatible with your tools and platforms. The technologies used during development can limit or expand your deployment alternatives. Laying out your tools and platforms against the candidate deployment avenues, for example in a table, makes compatibility easy to see at a glance.

- Evaluate costs

Every deployment avenue has its price, from setup costs to maintenance expenses. Understanding these costs ensures that your deployment choice remains financially viable. A Total Cost of Ownership (TCO) analysis gives a holistic view of all potential expenses.

- Factor in security requirements

Some deployment avenues give you more control and, consequently, stronger security. Determine the level of security your model requires, since that in turn constrains your choice of deployment method. Assessing the model’s vulnerabilities helps you select deployment methods fortified against potential threats.

- Test different deployment options

Before committing, it’s wise to try several deployment methods on a smaller scale to assess their fit. Evaluate how each fares in terms of functionality, user-friendliness, and other crucial metrics. Such small-scale trials give you hands-on experience with different deployment routes, leading to a more informed and effective decision.
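The weighted matrix and Total Cost of Ownership ideas above can be combined into a small scoring script. This is a sketch under stated assumptions: the criteria, weights, 1 to 5 scores, cost figures, 36-month horizon, and budget are all hypothetical placeholders to be replaced with your own assessments. Options whose TCO exceeds the budget are dropped first; the rest are ranked by weighted score.

```python
# Hypothetical criteria weights (summing to 1.0); adjust to your priorities
WEIGHTS = {"scalability": 0.3, "security": 0.3, "tool_compatibility": 0.2, "ease_of_use": 0.2}

# Hypothetical 1-5 scores for each candidate deployment option
OPTIONS = {
    "local": {"scalability": 1, "security": 4, "tool_compatibility": 5, "ease_of_use": 5},
    "cloud": {"scalability": 5, "security": 3, "tool_compatibility": 4, "ease_of_use": 4},
    "on_premise": {"scalability": 3, "security": 5, "tool_compatibility": 3, "ease_of_use": 2},
}

# Hypothetical costs: (one-off setup cost, recurring monthly cost)
COSTS = {"local": (500, 50), "cloud": (1_000, 400), "on_premise": (20_000, 300)}


def total_cost_of_ownership(setup: float, monthly: float, months: int) -> float:
    """Simplified TCO: setup plus recurring costs over the planning horizon."""
    return setup + monthly * months


def rank_options(options, weights, costs, budget, months=36):
    """Drop options over budget, then rank the rest by weighted score (highest first)."""
    ranked = []
    for name, scores in options.items():
        tco = total_cost_of_ownership(*costs[name], months)
        if tco > budget:
            continue  # not financially viable over the horizon
        score = sum(weight * scores[criterion] for criterion, weight in weights.items())
        ranked.append((round(score, 2), name, tco))
    return sorted(ranked, reverse=True)


# With these placeholder numbers, "cloud" ranks first, then "local"; "on_premise" exceeds the budget
for score, name, tco in rank_options(OPTIONS, WEIGHTS, COSTS, budget=20_000):
    print(f"{name}: score {score}, 36-month TCO {tco}")
```

Treating cost as a hard budget filter rather than as another weighted criterion is one design choice; folding cost into the weighted score is an equally reasonable alternative.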